Ghost in the machine

Pakistan, grappling with the absence of a personal data protection law for over four years, now faces a bigger issue in the shape of a rapidly evolving digital ecosystem dominated by generative artificial intelligence (AI).

The challenge for the government lies in crafting policies to ensure fair and responsible AI for consumers – a global issue that may not have an adequate national response.

Recent breakthroughs in generative AI have taken the digital world by storm. Its adoption by consumers has grown rapidly, and the technology is set to have an enormous impact on the way we work, create, communicate, gather information, and much more.

Against this backdrop, this year’s World Consumer Rights Day (March 15) was marked by a global conversation on fair and responsible AI for consumers.

The discussions were spearheaded by Consumers International (CI), an umbrella body of 200 consumer organisations from 100 countries worldwide.

CI says: “There is a real opportunity here. Used properly, generative AI could enhance consumer care and improve channels of redress. However, it will also have serious implications for consumer safety and digital fairness. With developments taking place at breakneck speed, we must move quickly to ensure a fair and responsible AI future.”

World Consumer Rights Day highlighted concerns like misinformation, privacy violations and discriminatory practices, as well as how AI-driven platforms can spread false information and perpetuate biases.

The questions posed by CI for building the foundations for genuine transparency and trust include: What measures are needed to protect consumers against deep fakes and misinformation? How do we ethically navigate the collection and use of consumer data? And who is responsible when a person is harmed by generative AI?

The global debate on AI has ranged from how generative AI may be used to increase efficiency in the workforce and spark creativity to how it can be used to spread disinformation, manipulate individuals and society, displace jobs, and challenge artists’ copyrights.

It also revolves around how to adequately protect consumers from techniques that impair a person’s ability to make an informed decision, and which principles should apply to all AI systems.

Many consumer groups are working on the issue. The Norwegian Consumer Council in its report ‘Ghost in the Machine: Addressing the consumer harms of generative AI’ says that the discussion about how to control or regulate these systems is ongoing, with policymakers across the world trying to engage with the promises and challenges of generative AI.

Though the council does not claim to have the answers to all the questions raised by generative AI, it argues that many of the emerging or ongoing issues can be addressed through a combination of regulation, enforcement, and concrete policies designed to steer the technology in a consumer and human-friendly direction.

AI-powered systems in consumer-facing applications are fast approaching, with the mass deployment and adoption of generative AI systems. Generative AI is a subset of artificial intelligence that can generate synthetic content such as text, images, audio, or video, which can closely resemble human-created content. Such systems are poised to change many of the interfaces and content consumers meet today.

As the technology is developed and adopted, generative AI may be used to automate tedious and time-consuming processes that previously had to be done manually, for example by writing concise texts, filling in forms, generating schedules or plans, or writing software code.

It has the potential to make services more cost-efficient, which may also lower costs for consumers, for example when soliciting legal advice.

The proliferation of low-cost AI-generated content may replace human labour and human-generated content, thus lowering the quality of consumer-facing services such as customer support. The technology also opens new avenues for consumer manipulation in areas such as advertising or product recommendations and can facilitate obfuscating discriminatory practices.

It is possible to use AI with reduced risks and increased benefits. However, for this to happen, these technologies must be programmed to follow ethical standards and respect for human rights.

“We also strongly urge governments, enforcement agencies, and policymakers to act now, using existing laws and frameworks on the identified harms that automated systems already pose today. New frameworks and safeguards should be developed in parallel, but consumers and society cannot wait for years while technologies are being rolled out without appropriate checks and balances,” argues the Norwegian Consumer Council.

BEUC, the Brussels-based European consumer organisation, has called on the European Parliament to improve the draft AI Act, arguing that the datasets used to train generative AI systems need to be subject to important safeguards, such as measures to prevent and mitigate possible biases.

“Consumers should be made aware that they are interacting with a generative AI system. Deployers of generative AI in consumer-facing interfaces and services should have to disclose how the generated content is influenced by commercial interests. There must be clear rules on accountability and liability when a generative AI system harms consumers. And generative AI systems should be auditable by independent researchers, enforcement agencies, and civil society organisations, such as consumer organisations”, the recommendations add.

In healthcare, too, large multimodal models (LMMs) – a type of fast-growing generative AI technology – are being used. LMMs can accept one or more types of data inputs, such as text, videos, and images and generate diverse outputs not limited to the type of data inputted.

LMMs are unique in their mimicry of human communication and ability to carry out tasks they were not explicitly programmed to perform. LMMs have been adopted faster than any consumer application in history, with several platforms such as ChatGPT, Bard and Bert entering the public consciousness in 2023.

The World Health Organization (WHO) has also released new guidance, containing over 40 recommendations, on the ethics and governance of LMMs for consideration by governments, technology companies, and healthcare providers.

In one set of recommendations for developers, the WHO says that LMMs should not be designed by scientists and engineers alone. Potential users and all direct and indirect stakeholders, including medical providers, scientific researchers, healthcare professionals, and patients, should be engaged from the early stages of AI development in structured, inclusive, transparent design, and given opportunities to raise ethical issues, voice concerns, and provide input for the AI application under consideration.

LMMs should be designed to perform well-defined tasks with the necessary accuracy and reliability to improve the capacity of health systems and advance patient interests. Developers should also be able to predict and understand potential secondary outcomes.

While the world is developing the regulatory framework on AI, it is imperative for Pakistani policymakers to incorporate consumer-friendly principles in the existing regulations that might be used to curb AI misuse.

The writer works on consumer protection policy and regulations, and is a member of the 19-member advisory council of Consumers International. He can be reached at: nadympak@hotmail.com

-

King Offers Harry, Meghan Markle A 30 Bedroom Lodge Despite Its Decades Of Baggage: ‘it’s An Olive Branch’

King Offers Harry, Meghan Markle A 30 Bedroom Lodge Despite Its Decades Of Baggage: ‘it’s An Olive Branch’ -

Selma Blair Talks About How Her Debilitating Disease Is 'misunderstood'

Selma Blair Talks About How Her Debilitating Disease Is 'misunderstood' -

China’s 5-year Tech Strategy: What To Expect At Annual Parliament Meeting Amid Rivalry With West

China’s 5-year Tech Strategy: What To Expect At Annual Parliament Meeting Amid Rivalry With West -

Andrew’s Total Meltdown On The Day Of Eviction: Insider Breaks It Down Word For Word

Andrew’s Total Meltdown On The Day Of Eviction: Insider Breaks It Down Word For Word -

Michael J. Fox Stuns Actor Awards Audience With Rare Confession Amid Parkinson's Disease

Michael J. Fox Stuns Actor Awards Audience With Rare Confession Amid Parkinson's Disease -

Beatrice’s In-laws Stand Against Her Marriage: ‘Furious Their Son Is Wrapped Up In Wreckage’

Beatrice’s In-laws Stand Against Her Marriage: ‘Furious Their Son Is Wrapped Up In Wreckage’ -

Jessie Buckley Utters 'wild' Remarks For 'Hamnet' Co-star Emily Watson At Actor Awards

Jessie Buckley Utters 'wild' Remarks For 'Hamnet' Co-star Emily Watson At Actor Awards -

Who Could Replace Ayatollah Ali Khamenei? Iran’s Top Successor Candidates Explained

Who Could Replace Ayatollah Ali Khamenei? Iran’s Top Successor Candidates Explained -

Oliver 'Power' Grant Cause Of Death Revealed

Oliver 'Power' Grant Cause Of Death Revealed -

Michael B. Jordan Makes Bombshell Confession At Actor Awards After BAFTA Controversy: 'Unbelievable'

Michael B. Jordan Makes Bombshell Confession At Actor Awards After BAFTA Controversy: 'Unbelievable' -

Prince William Willing To Walk Road He ‘loathes’ For ‘horror Show’ Escape: ‘He’s Running Out Of Allies Fast’

Prince William Willing To Walk Road He ‘loathes’ For ‘horror Show’ Escape: ‘He’s Running Out Of Allies Fast’ -

Pentagon Says No Evidence Iran Planned Attack On US, Undercutting Strike Justification

Pentagon Says No Evidence Iran Planned Attack On US, Undercutting Strike Justification -

Prince William’s Changes Priorities With Harry After Kate Middleton’s Remission: ‘It Couldn't Be Worse’

Prince William’s Changes Priorities With Harry After Kate Middleton’s Remission: ‘It Couldn't Be Worse’ -

Justin Bieber Gets Touching Tribute From Mom Pattie Mallette On Turning 32 Amid Limited-edition Birthday Drop

Justin Bieber Gets Touching Tribute From Mom Pattie Mallette On Turning 32 Amid Limited-edition Birthday Drop -

Jada Pinkett Smith Details How Her Memoir Combats 'shame' Around Alopecia

Jada Pinkett Smith Details How Her Memoir Combats 'shame' Around Alopecia -

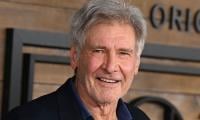

Harrison Ford Reflects On Career As He Receives Life Achievement Award At 2026 Actor Awards

Harrison Ford Reflects On Career As He Receives Life Achievement Award At 2026 Actor Awards