AI may complicate accountability in medical errors, experts warn

The rise of artificial intelligence in healthcare could create a complex blame game

Legal and medical experts are increasingly concerned that integrating artificial intelligence (AI) into healthcare could create a blame game, making it significantly harder to establish liability for medical failings.

The development of AI for clinical use has sparked widespread change, and researchers are constructing tools ranging from algorithms that evaluate scans to systems that aid with diagnoses.

AI is also being developed to support hospital management tasks, from maximizing bed utilization to optimizing supply chains.

Despite the many potential advantages for healthcare, experts are concerned about the effectiveness of AI tools and are raising questions about who is responsible when a patient experiences a negative outcome.

The JAMA Summit on Artificial Intelligence, hosted last year by the Journal of the American Medical Association, brought together a panel of experts including technology companies, regulatory bodies, insurers, lawyers and economists.

The resulting report not only describes the nature of AI tools and the areas of healthcare in which they are used but also examines the challenges they present, including legal concerns.

Professor Glenn Cohen from Harvard Law School, a co-author of the report, said that patients could face difficulties demonstrating flaws in the use or design of an artificial intelligence product.

Hurdles remain in obtaining information about a product's inner workings, and it can be challenging both to propose a reasonable alternative design for the product and to prove that a poor outcome was caused by the AI system.

Another author of the report, Professor Michelle Mello from Stanford Law School, said, “The problem is that it takes time and will involve inconsistencies in the early days, and this uncertainty elevates costs for everyone in the AI innovation and adoption ecosystems.”

The report outlines that there are many barriers to evaluating AI tools, making full assessments difficult, and emphasizes that new approaches are needed to establish liability for medical failings.

-

Travis Kelce's true feelings about Taylor Swift's pal Ryan Reynolds revealed

-

David Beckham pays tribute to estranged son Brooklyn amid ongoing family rift

-

Brooklyn Beckham covers up more tattoos linked to his family amid rift

-

Jennifer Hudson gets candid about Kelly Clarkson calling it day from her show

-

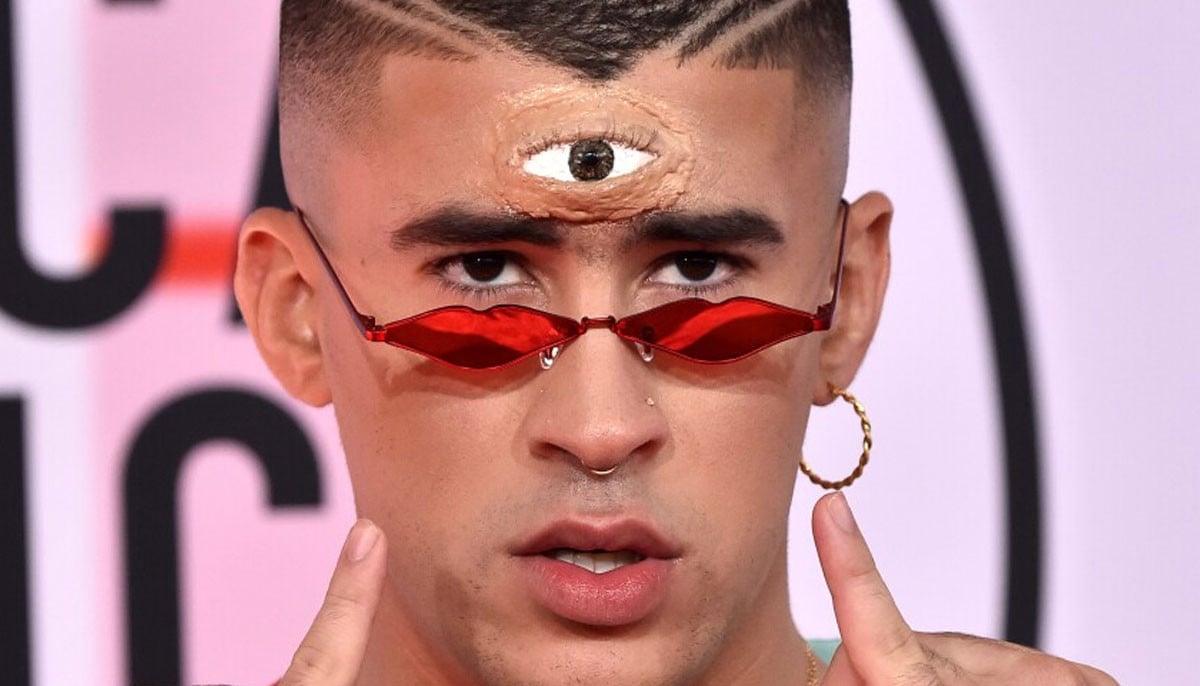

Bad Bunny faces major rumour about personal life ahead of Super Bowl performance

-

Will Smith couldn't make this dog part of his family: Here's why

-

Kylie Jenner in full nesting mode with Timothee Chalamet: ‘Pregnancy no surprise now’

-

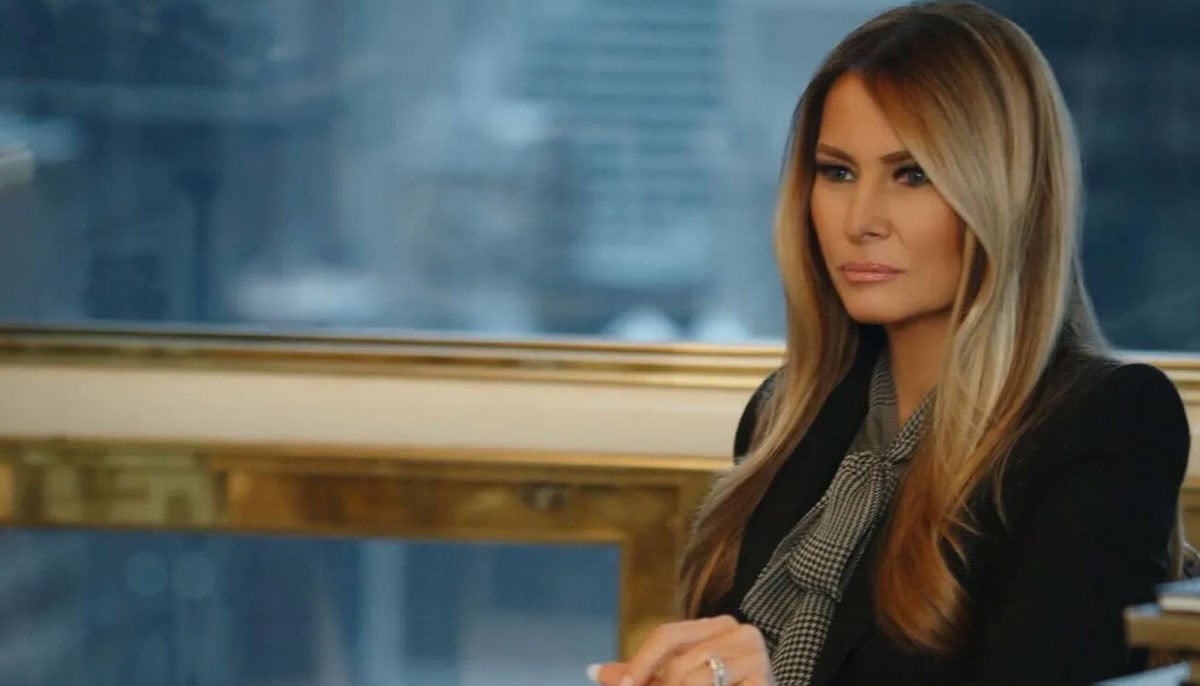

Bianca Censori on achieving 'visibility without speech': 'I don't want to brag'