AI chatbots providing 'flawed' or 'inaccurate' information, study finds

European Broadcasting Union says leading AI assistants such as OpenAI's ChatGPT, Microsoft's Copilot, Google's Gemini and Perplexity are misrepresenting news content

Whether it is fast-paced content generation, workplace efficiency or support for studies, AI-powered technologies have taken over everyday tasks in almost every sector.

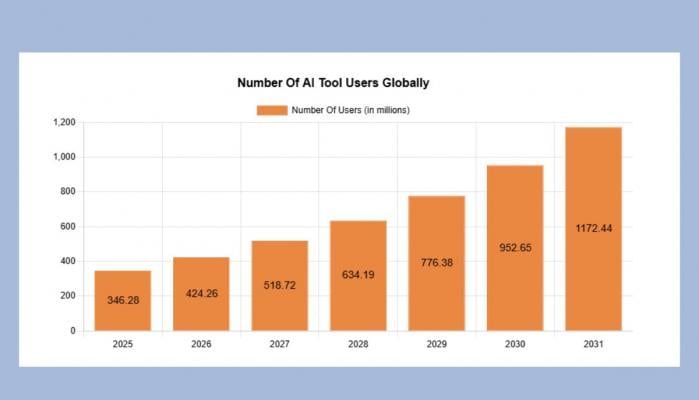

According to a report published by Digitalsilk on September 30, 2025, over 1.1 billion people are expected to use AI by 2031, making it one of the fastest-adopted technologies in history.

Artificial intelligence has transformed daily life. Still, experts find that it may not be as dependable as it seems, and that much of the content AI generates cannot be relied on as a source of accurate information.

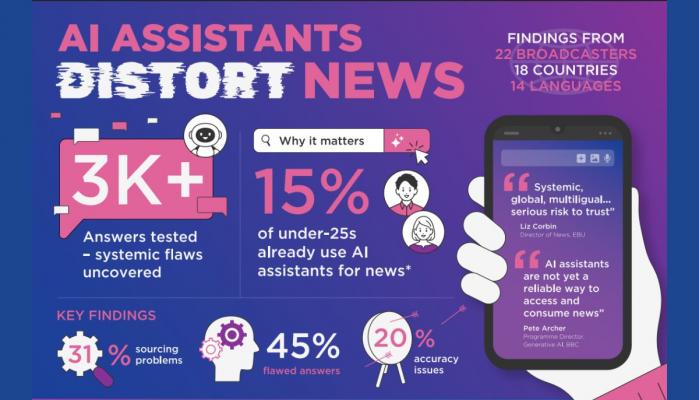

In its latest publication, "News Integrity in AI Assistants", released on Wednesday, October 22, 2025, the European Broadcasting Union (EBU) reports that leading AI assistants are misrepresenting news content.

The EBU stressed that AI chatbots often provide inaccurate or flawed information about major news events.

The international study analyzed how leading AI assistants, software applications that use AI to understand natural-language commands and complete tasks for users, answered questions about news content.

The EBU tested four of the most widely used AI assistants: OpenAI's ChatGPT, Microsoft's Copilot, Google's Gemini and Perplexity.

The report is one of the most extensive cross-market evaluations of its kind. It involved 22 public service media organizations in 18 countries, working in 14 languages, and assessed how these AI assistants respond to questions about news and current affairs.

The results showed that many of the AI assistants' answers about news events mistook parody for fact, gave wrong dates, or got the timeline of inventions or discoveries wrong.

Artificial intelligence is the ability of computer systems to perform tasks associated with human intelligence, such as reasoning, problem-solving, perception and decision-making.

The EBU's research builds on an earlier BBC study that exposed inaccuracies and errors in AI assistants' output.

A team of EBU researchers examined whether those flaws had since been addressed, and the results were alarming: AI assistants routinely misrepresent news content, regardless of the language, territory or AI platform tested.

The report's key findings reveal that almost half of all AI responses had at least one significant issue, many of them serious sourcing problems, and that one in five answers "contained major accuracy issues, including hallucinated details and outdated information."

Professional journalists from the participating media organizations evaluated the AI responses against key criteria, including accuracy, sourcing, distinguishing opinion from fact, and providing context.

Other outlets have also reported that AI should be restricted, or used with caution, in fields such as medicine and finance because of inaccuracies and unreliable sourcing.
