California becomes first state to require AI chatbots to confirm they aren’t human

California’s SB 243 sets national precedent for AI chatbot rules

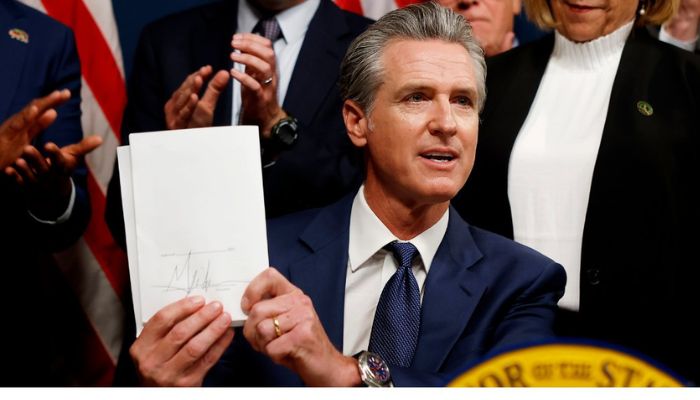

In light of growing concerns over the influence of artificial intelligence (AI) on human behaviour and mental health, California Governor Gavin Newsom has signed Senate Bill 243 (SB 243) into law.

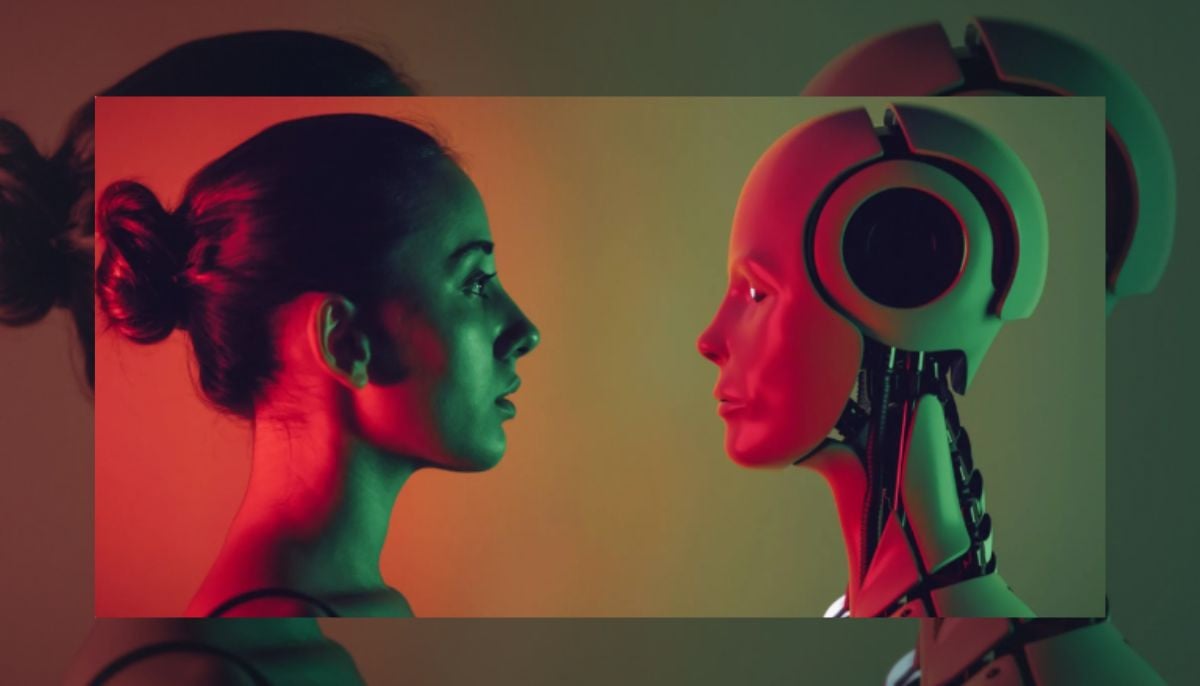

The bill marks the “first national safeguard” for residents, introducing strict regulations that require AI companion chatbots to clearly and conspicuously disclose that “they are not humans” and that they are artificially generated.

The disclosure is mandatory whenever a chatbot's interactions could mislead users into thinking they are talking with a human.

The landmark law takes effect on January 1, 2026, and primarily targets AI companion platforms, including OpenAI’s ChatGPT, Google’s Gemini, Anthropic’s Claude, and niche products such as Replika and Character.AI.

Transactional AI tools, such as customer service bots and voice assistants, are exempt because they do not maintain an ongoing relationship with users.

Key provisions of Senate Bill 243

The new law mandates:

- AI chatbots must disclose that they are not human if a reasonable user could be misled into thinking they are talking with a human. This covers companion bots used for conversation, emotional support or virtual relationships.

- For minors, these bots must repeat the disclosure every three hours of continuous interaction.

- Platforms must also implement protocols that discourage the creation of content encouraging suicidal thoughts, self-harm or self-destruction, and must refer users to crisis services where necessary.

- Operators must publish these safety measures online and report annually to the California Office of Suicide Prevention on their suicide prevention and user safety standards.

- People harmed by non-compliant AI systems can bring civil lawsuits, adding legal accountability to the framework.

Why did Governor Newsom push for this bill?

The regulatory push follows a growing number of AI-related controversies and tragedies.

The bill’s signing follows high-profile incidents in which individuals, especially minors, developed emotionally dependent relationships with AI bots, with disastrous outcomes.

One alarming case was the death by suicide of 16-year-old Adam Raine in April 2025, following what were allegedly disturbing chats with OpenAI’s ChatGPT.

According to legal filings, the conversations covered suicide and self-harm without proper redirection to crisis support.

Compounding the crisis, internal Meta communications uncovered by an investigative report indicated that the company’s AI chatbot had been having “romantic” and “sensual” conversations with minors, raising serious questions about safety and regulation in software that provides digital companionship.

During the bill signing, the Governor stated, “These technologies are evolving rapidly, and while they offer significant benefits, they also present real dangers, especially to our youth. SB 243 ensures transparency and sets clear boundaries to protect Californians from being manipulated or misled by machines masquerading as human.”

A focus on protecting minors

The primary focus of SB 243 is protecting minors, who are highly vulnerable to AI manipulation and emotional attachment.

The law builds on existing state-level measures like California’s Office of Suicide Prevention and dovetails with two additional bills signed into law this session:

- AB 56: Requires AI and social media companies to show warning labels to minors, notifying them about the possible mental health dangers associated with AI and internet use.

- AB 1043: Requires device manufacturers and app stores to verify users’ ages before granting access to platforms that may contain AI systems or other sensitive material. Notably, the bill does not require photo ID verification, addressing privacy concerns raised in other states.

Together, these bills form a comprehensive legal framework that positions California as a national leader in AI accountability.

-

Kylie Kelce reveals why she barely planned her wedding day?

-

Paul Anthony Kelly opens up on 'nervousness' of playing JFK Jr.

-

'Finding Her Edge' creator explains likeness between show and Jane Austin novel

-

How AI boyfriends are winning hearts in China: Details might surprise you

-

James Van Der Beek's final days 'hard to watch' for loved ones

-

Will Smith, Jada Pinkett's marriage crumbling under harassment lawsuit: Deets

-

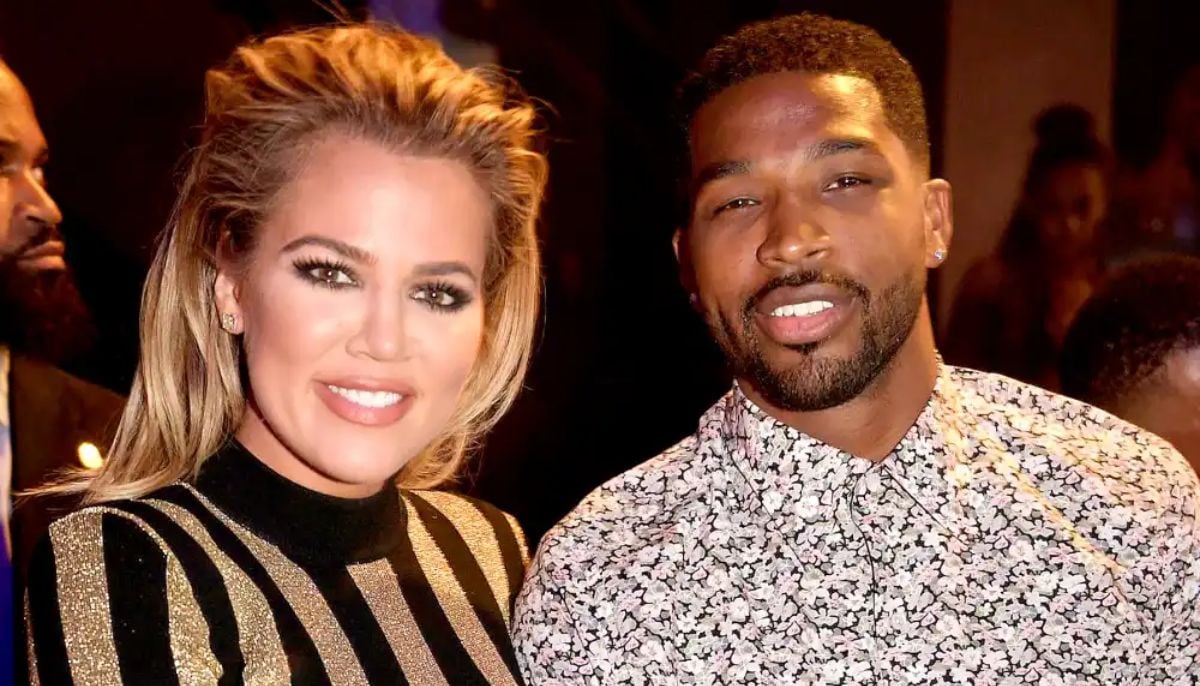

Khloe Kardashian reveals why she slapped ex Tristan Thompson

-

Taylor Armstrong walks back remarks on Bad Bunny's Super Bowl show