New details revealed about death of teen whose parents sued OpenAI over ChatGPT

Wrongful death suit against OpenAI now claims company removed ChatGPT suicide guardrails intentionally

The Raine family, which claims the OpenAI chatbot encouraged their 16-year-old son, Adam Raine, to take his own life, is now accusing the company of intentional misconduct rather than reckless indifference.

According to the New York Post, the latest amended lawsuit, filed by his parents on Wednesday, October 22, 2025, alleges that OpenAI twice loosened ChatGPT’s rules on discussing suicide before their son’s death. Adam took his own life using a method on which the chatbot had advised him step by step.

The amended complaint also alleges that the changes were part of a push to increase user engagement with the chatbot.

Previously, in August 2025, the Raine family filed the first wrongful death lawsuit against OpenAI and its CEO, Sam Altman.

Adam’s parents, Matthew and Maria Raine, sued OpenAI over the suicide of their son, who used to spend more than 3.5 hours a day conversing with the chatbot. They claimed that ChatGPT contributed to his death by providing him with a step-by-step guide for a suicide attempt.

According to the parents’ complaint, Adam Raine began using the AI chatbot in 2024 for homework help, but the teen gradually began to confess darker feelings and a desire to self-harm.

Over the next several months, the suit claims, ChatGPT validated and coached Raine’s suicidal impulses and readily advised him on methods of ending his life.

The lawsuit was already set to become a landmark case on real-world harms potentially caused by AI technology, alongside two similar cases proceeding against the chatbot platform Character.ai.

The Raine family has now escalated its accusations, alleging that OpenAI intentionally put users at risk by removing guardrails intended to prevent such tragedies.

The Raine family’s amended filing:

The family’s amended filing alleges that OpenAI eliminated a rule requiring ChatGPT to categorically refuse any discussion of suicide or self-harm. Previously, the bot’s framework had it decline to engage in discussions involving these topics.

The family alleges that "the change was intentional" and that OpenAI made it strategically, before releasing a new model that was specifically designed to maximize user engagement.

Furthermore, they claim that the updated "Model Specifications", the technical rulebook for ChatGPT’s behaviour, instructs that the assistant “should not change or quit the conversation”.

According to the filing, this change stripped OpenAI’s safety framework of a rule that had previously been in place to protect users in crisis who expressed suicidal thoughts.

Addressing the complaint, the Raine family’s legal team described the change as offensive and harmful.

Lead counsel Jay Edelson said, “We expect to prove to a jury that OpenAI’s decisions to degrade the safety of its products were made with full knowledge that they would lead to innocent deaths.”

“No company should be allowed to have this much power if they won’t accept the moral responsibility that comes with it,” Edelson added.

Responding to the allegations, an OpenAI spokesperson said, “Teen well-being is a top priority for us — minors deserve strong protections, especially in sensitive moments.”

“We have safeguards in place today, such as surfacing crisis hotlines, re-routing sensitive conversations to safer models, nudging for breaks during long sessions, and we’re continuing to strengthen them.”

The spokesperson also pointed out that ChatGPT’s latest model, GPT-5, is trained to recognize signs of mental distress, and that ChatGPT offers parental controls.

-

Val Chmerkovskiy shares message with fans after hospitalization: 'Thank God, no brain tumour'

-

Elon Musk: Tesla set to lead in AGI and revolutionary atom-shaping AI

-

Madeline Ross, sister of popular streamer Adin Ross, dies at 36

-

Selena Gomez discusses the 'coolest' milestone she shares with BFF Taylor Swift

-

Apple unveils latest MacBook Pro models with game-changing features, huge storage capacity

-

'Harry Potter' star eyes comeback to the HBO reboot show

-

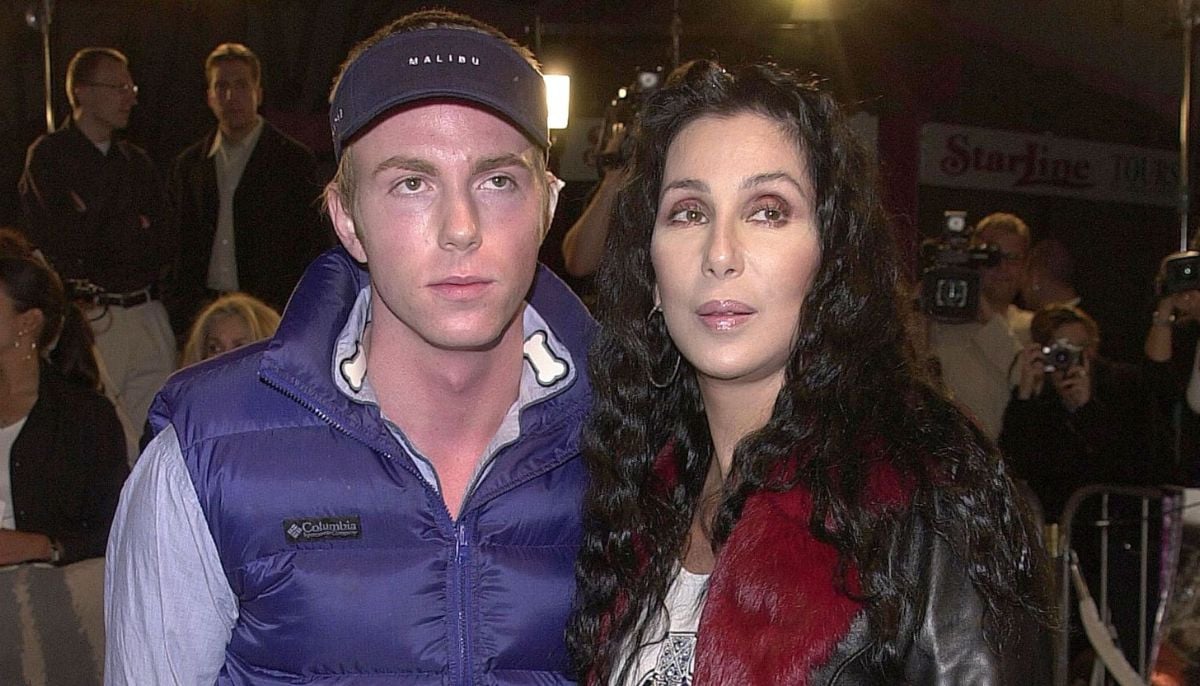

Cher’s son Elijah Blue Allman lands behind bars second time in 72 hours

-

Timothée Chalamet reveals one thing that influenced his acting career