Elon Musk’s Grok under fire for leaking user chats

Grok chatbot publishes 370,000 user conversations on Google

Elon Musk’s artificial intelligence (AI) chatbot Grok is under fire after thousands of its conversations were leaked through Google Search, exposing sensitive data and even dangerous AI instructions. xAI’s sharing feature had quietly made the chats public without users’ knowledge.

The issue stems from the Grok app’s "share" button.

When Grok users press the button to share a transcript of their conversation, a unique link is created. But as well as sharing the chat with the intended recipient, the button also appears to have made the chats searchable online.

According to Forbes, a Google search on Thursday, August 21, 2025, revealed that Google had indexed more than 370,000 Grok conversations, which users had unwittingly published online by clicking "share".

These conversations range from mundane tasks like writing tweets, creating secure passwords and writing meal plans to the much more extreme, such as creating fake images of a terror attack or drawing up a plan for the assassination of Elon Musk, reported Forbes.

Users had also shared medical and mental health information, user names and locations, text documents, business spreadsheets and even passwords with the Grok bot.

The chatbot was even found to provide instructions for making a bomb, constructing malware and cooking up drugs such as fentanyl and methamphetamine, despite xAI’s explicit rules meant to stop the bot from generating harmful content.

Meanwhile, other publicly exposed conversations with large language models (LLMs) have raised serious privacy concerns for users.

Privacy Disaster:

After Grok's huge data breach, some tech experts have described AI chatbots as “a privacy disaster.”

While users’ account details may be anonymous or obscured in shared chatbot transcripts, their prompts may still contain, and risk revealing, personal and sensitive information about someone.

Prof Luc Rocher, associate professor at the Oxford Internet Institute, told the BBC that “AI chatbots are a privacy disaster in progress.”

Tech experts also reported that leaked chatbot conversations have divulged user information ranging from full names and locations to sensitive details about users' mental health, business operations or relationships.

"Once leaked online, these conversations will stay there forever," they added.

Meanwhile, Carissa Veliz, associate professor in philosophy at Oxford University's Institute for Ethics in AI, said it was "problematic" that users were not told their shared chats would appear in search results.

"Our technology doesn't even tell us what it's doing with our data, and that's a problem," she added.

Tech experts say these leaks highlight mounting concerns over users' privacy.

-

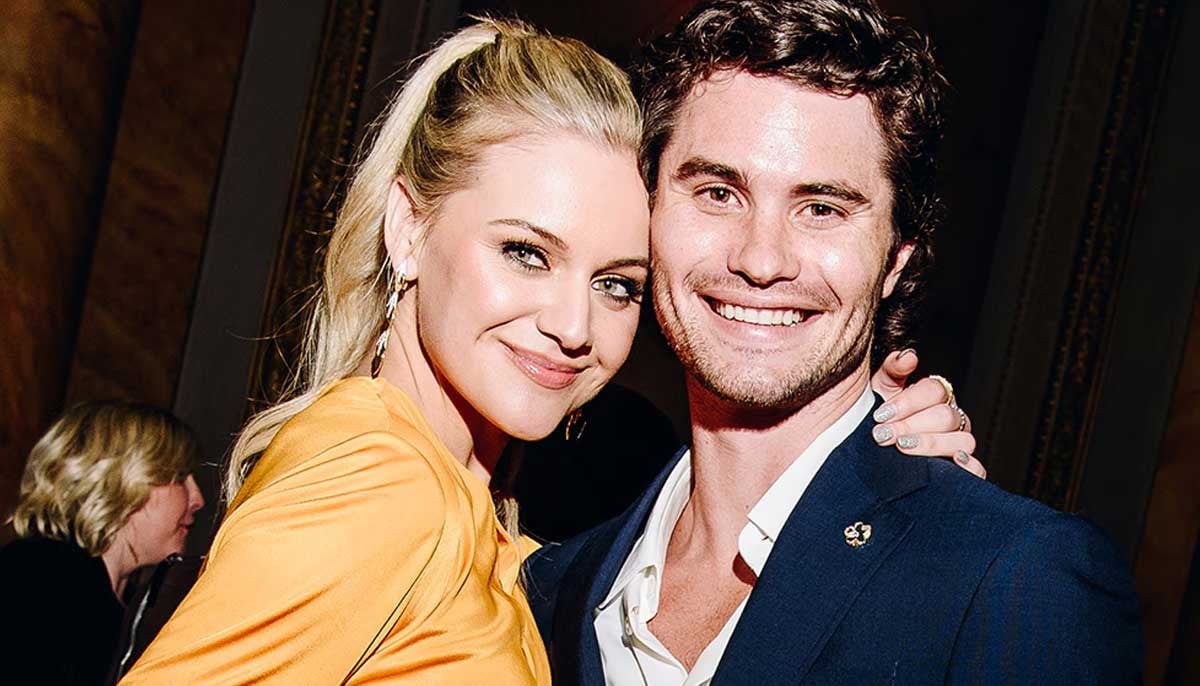

Where Kelsea Ballerini, Chase Stokes stand after second breakup

-

Marc Anthony weighs in on Beckham family rift

-

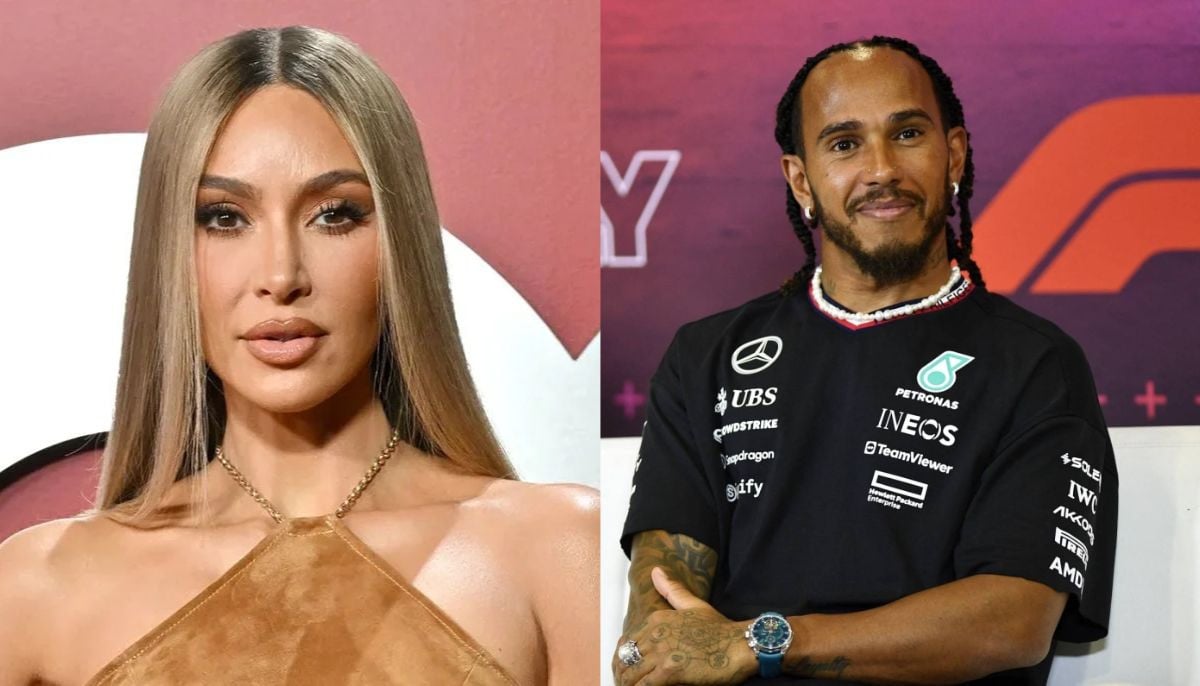

Kim Kardashian's plans with Lewis Hamilton after Super Bowl meet-up

-

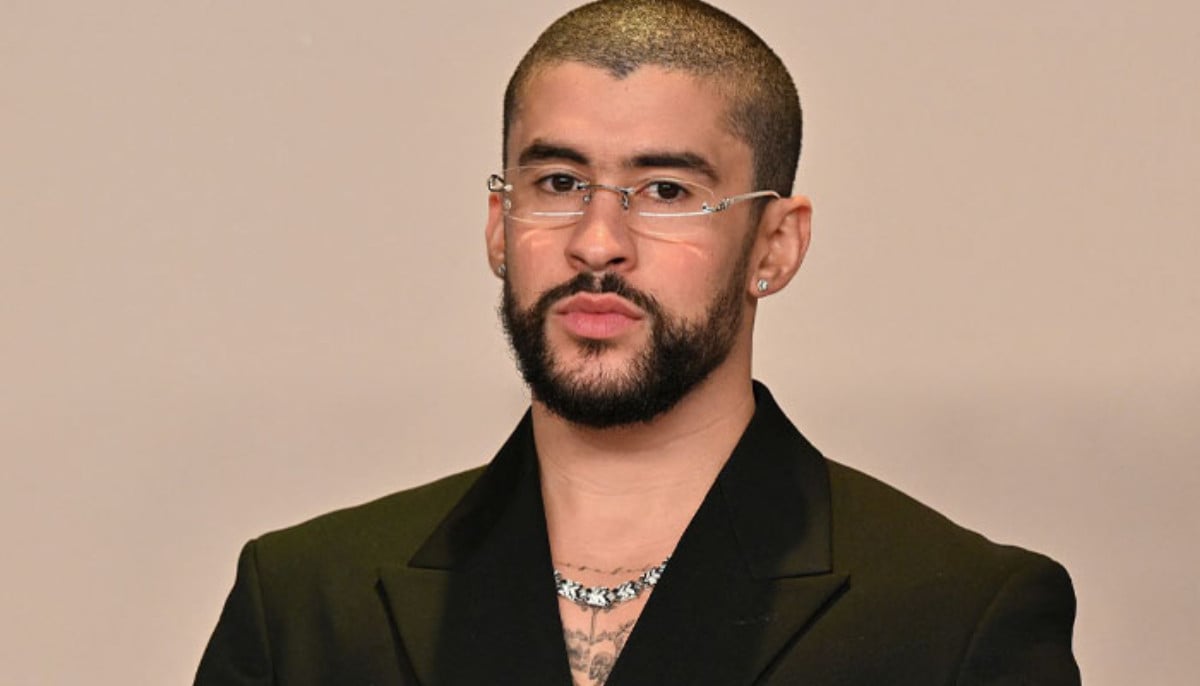

AOC blasts Jake Paul over Bad Bunny slight: 'He makes you look small'

-

Logan Paul's bodyguard hits fan on Super Bowl day

-

Bad Bunny leaves fans worried with major move after Super Bowl halftime show

-

Steven Van Zandt criticizes Bad Bunny's 2026 Super Bowl performance

-

Kim Kardashian promised THIS to Lewis Hamilton at the 2026 Super Bowl?