Amazon's new AI cuts response time by 30% by mimicking the brain

The study focuses on the speech domain but can be extended to foundation models of other fields such as NLP and computer vision

In breakthrough research, Amazon researchers have developed an artificial intelligence (AI) architecture that speeds up response times by activating only the parts of the system that a given task needs. The approach resembles the way the human brain uses specific regions for different tasks.

A major challenge in AI development today is the inefficiency of running an entire neural network for every request, even when most of that processing isn’t needed. The new architecture addresses this by activating only the relevant neurons.

How it works

Traditional deployment of large language models (LLMs) and foundational AI systems squanders computational power by activating all neurons for every task.

While this guarantees versatility, it results in significant inefficiency: much of the network’s activity is superfluous for any given prompt. The study, titled “Context-aware dynamic pruning for speech foundation models”, is inspired by the brain’s efficiency and dynamically prunes (skips) irrelevant neurons in real time.

A lightweight “gate predictor” assesses inputs such as language, task type (e.g. translation or speech recognition) and context, then creates a “mask” that turns off unnecessary modules. This ensures that only the necessary parts of the model are active, which saves processing time without sacrificing accuracy.
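
The gating idea can be illustrated with a minimal Python sketch. It is not the researchers' implementation: the module names, the two-feature context encoding, the weights and the 0.5 threshold are all made up for illustration, and a real gate predictor would be a small trained network rather than hand-set numbers.

```python
from dataclasses import dataclass
from math import exp
from typing import Callable, Dict, List

Vector = List[float]


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + exp(-x))


@dataclass
class GatePredictor:
    """Toy stand-in for the lightweight gate predictor (weights are invented)."""
    weights: Dict[str, List[float]]  # module name -> one weight per context feature
    threshold: float = 0.5

    def mask(self, context: Vector) -> Dict[str, bool]:
        # One keep/skip decision per module, from a weighted sum of context features.
        return {
            name: sigmoid(sum(w * c for w, c in zip(ws, context))) >= self.threshold
            for name, ws in self.weights.items()
        }


def run_model(x: Vector,
              modules: Dict[str, Callable[[Vector], Vector]],
              mask: Dict[str, bool]) -> Vector:
    # Masked-out modules are skipped entirely, so their computation never runs.
    for name, fn in modules.items():
        if mask[name]:
            x = fn(x)
    return x


# Hypothetical context encoding: [is_speech_recognition, is_translation]
ASR = [1.0, 0.0]
TRANSLATION = [0.0, 1.0]

# Hypothetical modules; each is a trivial function standing in for a network block.
modules = {
    "local_context": lambda v: [a + 1.0 for a in v],
    "global_context": lambda v: [a * 2.0 for a in v],
    "feed_forward": lambda v: [a - 0.5 for a in v],
}

predictor = GatePredictor(weights={
    "local_context": [4.0, 2.0],
    "global_context": [-4.0, 3.0],
    "feed_forward": [-1.0, 2.0],
})

asr_mask = predictor.mask(ASR)
translation_mask = predictor.mask(TRANSLATION)
print("speech recognition keeps:", [m for m, keep in asr_mask.items() if keep])
print("translation keeps:", [m for m, keep in translation_mask.items() if keep])
```

With these invented weights, the speech recognition context keeps only the local-context module, while the translation context keeps all three. The saving comes from `run_model` never calling the modules that the mask turns off.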

Key benefits

The most notable advantage of this kind of AI model is higher efficiency. Tests showed a 34% reduction in inference time for speech-to-text tasks, cutting latency from 9.28 seconds to 5.22 seconds.

It also lowers costs. By skipping approximately 60% of computations as unnecessary, the system brings down cloud and hardware expenses, making it comparatively inexpensive to run.

The faster responses do not come at the expense of quality. Performance metrics such as translation accuracy (BLEU score) and speech recognition accuracy (word error rate) remain strong even with moderate pruning.

The new architecture is also highly adaptable. The system adjusts pruning to the task, prioritizing local context for speech recognition while keeping more modules active for translation.

Broader implications

This approach could make AI more energy-efficient, which is crucial as models grow larger. It could also enable personalized AI that optimizes processing based on user needs or device capabilities.

The technique has applications beyond speech, including language processing and computer vision. By making AI faster and cheaper, Amazon’s innovation could help deploy advanced models more widely while reducing environmental and operational costs.

-

Nancy Guthrie’s kidnapper will be caught soon: Here’s why

-

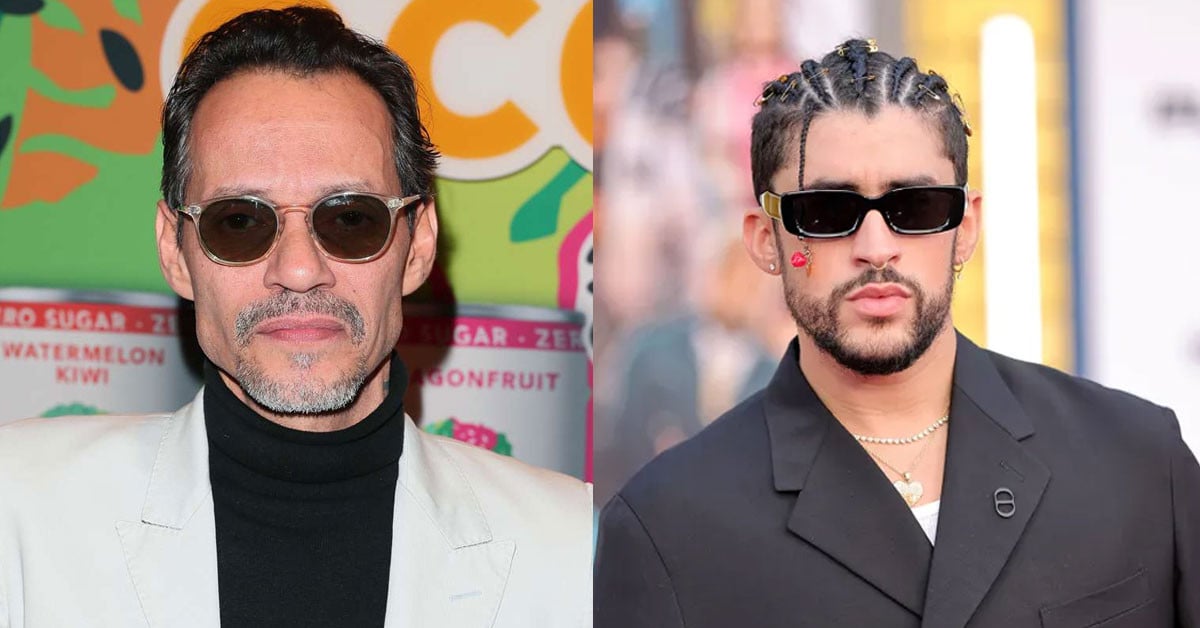

Internet splits over New York's toilet data amid Bad Bunny's Super Bowl show

-

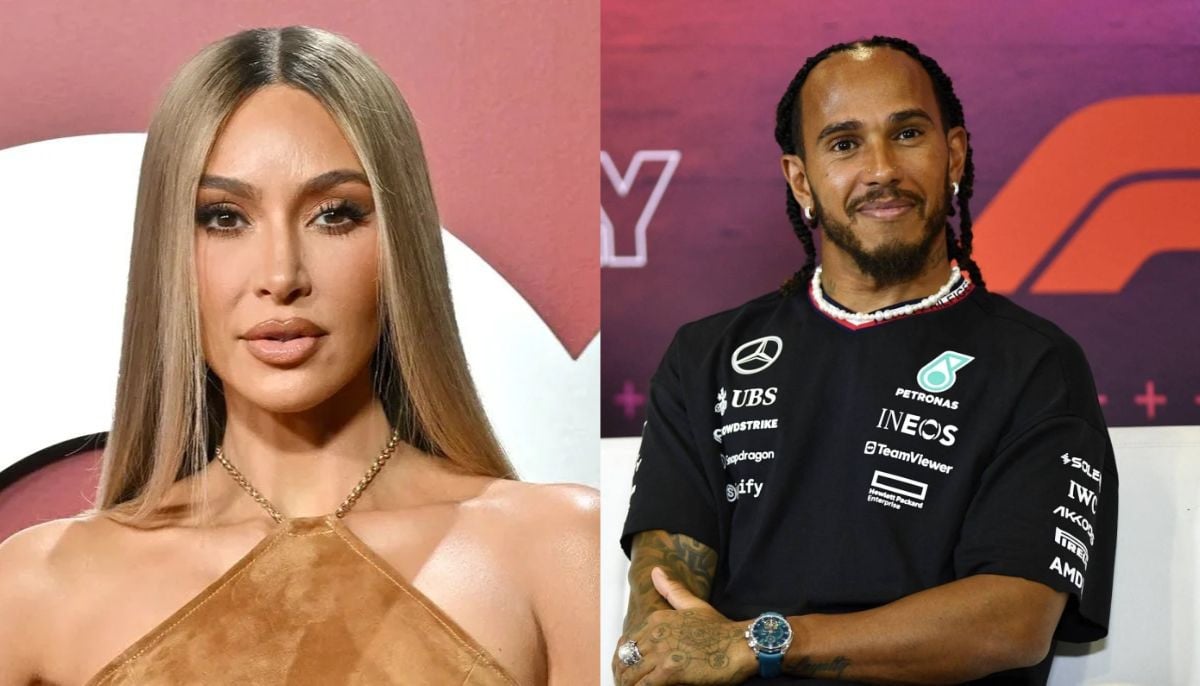

Lewis Hamilton spent years trying to catch Kim Kardashian's attention?

-

Ferrari Luce: First electric sports car unveiled with Enzo V12 revival

-

Jon Stewart on Bad Bunny's Super Bowl performance: 'Killed it''

-

Catherine O’Hara’s cause of death finally revealed

-

Swimmers gather at Argentina’s Mar Chiquita for world record attempt

-

Elon Musk unveils SpaceX plan for civilian Moon, Mars trips