One simple brain function can improve AI performance, study claims

Researchers find how brain-inspired AI could cut energy use and boost performance

A new study offers clues on how researchers can improve the performance of artificial intelligence (AI) by borrowing from the way the human brain works.

The approach, developed at the University of Surrey in Guildford, England, takes direct inspiration from the biological neural networks of the human brain.

According to the study, published in Neurocomputing, mimicking the brain's neural wiring can significantly improve the performance of the artificial neural networks (ANNs) used in generative AI and other modern AI models, such as ChatGPT.

The study describes a method called Topographical Sparse Mapping (TSM), which wires artificial neurons together in a way that mirrors how the human brain organises information efficiently.

Unlike conventional deep-learning models, which connect every neuron in one layer to all neurons in the next, wasting energy, TSM connects each neuron only to nearby or related ones.
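The contrast between full and local wiring can be illustrated with a minimal sketch. It is not the study's implementation: the function name `topographic_mask`, the one-dimensional neuron layout and the `radius` parameter are all assumptions made for illustration, since the article does not say how "nearby or related" is defined.

```python
import numpy as np

def topographic_mask(n_in, n_out, radius):
    """Return a boolean (n_out, n_in) mask: True where a connection is kept.

    Hypothetical illustration only: neurons are placed on a line and
    "nearby" means within `radius` of each other on that line.
    """
    # Spread the input and output neurons evenly over the same [0, 1] line.
    in_pos = np.linspace(0.0, 1.0, n_in)
    out_pos = np.linspace(0.0, 1.0, n_out)
    # Distance between every output neuron and every input neuron.
    dist = np.abs(out_pos[:, None] - in_pos[None, :])
    # Keep a connection only when the two neurons are close together.
    return dist <= radius

mask = topographic_mask(n_in=100, n_out=10, radius=0.05)
dense_connections = mask.size           # what a fully connected layer would use
kept_connections = int(mask.sum())      # what the local wiring keeps
sparsity = 1.0 - kept_connections / dense_connections
print(f"kept {kept_connections} of {dense_connections} connections, "
      f"sparsity {sparsity:.1%}")
```

In this toy layout each output neuron keeps only a handful of the 100 possible inputs, so most of the connections a conventional layer would store are never created in the first place.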

Researchers from Surrey's Nature-Inspired Computation and Engineering (NICE) group said the new model eliminates the need for vast numbers of unnecessary connections, improving performance more sustainably without sacrificing accuracy.

Senior Lecturer in Computational Biology at the University of Surrey, Dr Roman Bauer, said, "Our work shows that intelligent systems can be built far more efficiently, cutting energy demands without sacrificing performance."

"Training many of today's popular large AI models can consume over a million kilowatt-hours of electricity, which simply isn't sustainable at the rate AI continues to grow," Dr Bauer added.

An enhanced version called Enhanced Topographical Sparse Mapping (ETSM) goes a step further by introducing a biologically inspired "pruning" process during training.

The researchers say the process is similar to how the brain gradually refines its neural connections as it learns.
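The general idea of pruning during training can be sketched as follows. The article does not state which criterion ETSM uses to decide what to remove, so this example assumes simple magnitude-based pruning, a common stand-in; the helper `prune_smallest` and the 50% per-round fraction are hypothetical choices, not the study's.

```python
import numpy as np

def prune_smallest(weights, mask, fraction):
    """Switch off the given fraction of still-active connections,
    choosing those with the smallest absolute weight.

    Assumed criterion (magnitude pruning), not taken from the study.
    """
    active = np.flatnonzero(mask)            # flat indices of live connections
    n_drop = int(fraction * active.size)
    if n_drop == 0:
        return mask
    magnitudes = np.abs(weights.ravel()[active])
    drop = active[np.argsort(magnitudes)[:n_drop]]
    new_mask = mask.copy()
    new_mask.flat[drop] = False              # remove the weakest connections
    return new_mask

rng = np.random.default_rng(0)
weights = rng.normal(size=(10, 100))
mask = np.ones_like(weights, dtype=bool)

for step in range(5):
    # A real training loop would update `weights` here before each pruning round.
    mask = prune_smallest(weights, mask, fraction=0.5)
    weights = weights * mask                 # pruned connections stay at zero

final_sparsity = 1.0 - mask.mean()
print(f"{int(mask.sum())} connections remain, sparsity {final_sparsity:.1%}")
```

Each round halves the surviving connections, so the network thins out gradually as training proceeds rather than being cut down in one step.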

Moreover, Surrey's new enhanced model achieved up to 99% sparsity, meaning it could remove almost all the usual neural connections – yet still matched the accuracy of standard networks on benchmark datasets.

Because it avoids the constant fine-tuning and rewiring used by other approaches, it trains faster, uses less memory and consumes less than 1% of the energy of a conventional AI system.

In addition, the research team is exploring how the approach could be used in other applications, such as more realistic neuromorphic computers, which are built on a computing approach inspired by the human brain's structure and function.

-

Noah Wyle gives exciting update about 'The Pitt' season 3

-

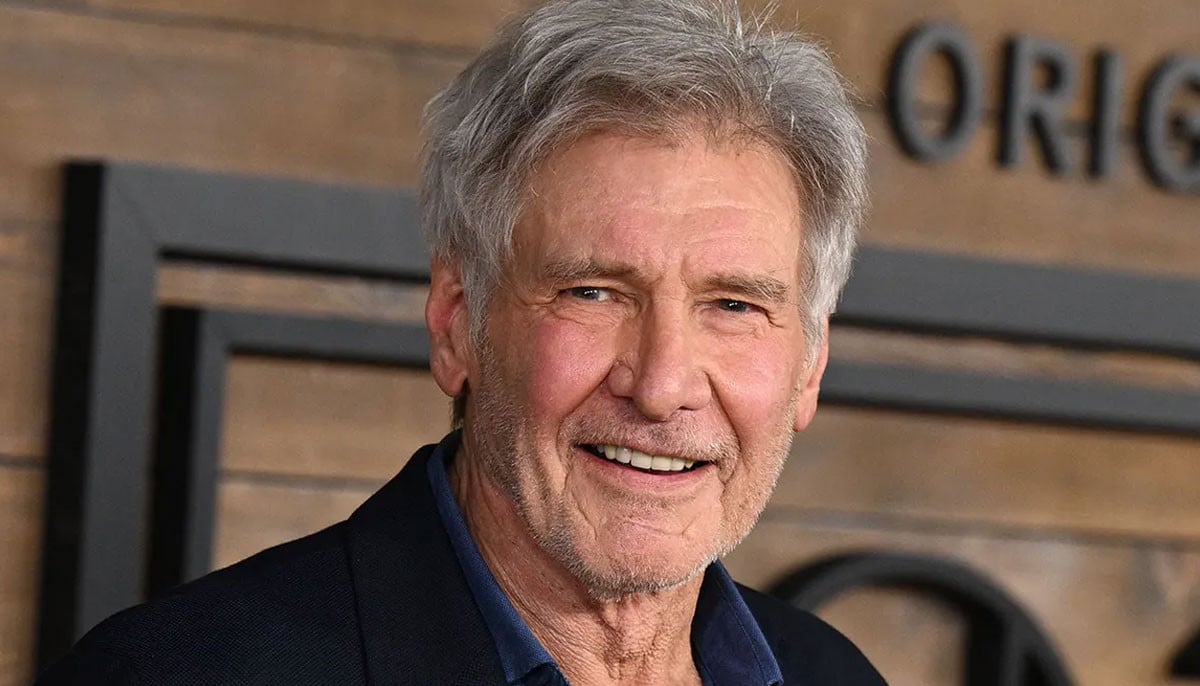

Woody Harrelson unveils the favour ‘living legend’ Harrison Ford asked for before Actor Awards

-

Seth Rogen unveils Catherine O’Hara's distinguished quality in emotional tribute

-

Michael J. Fox stuns Actor Awards audience with rare confession amid Parkinson's disease

-

Jessie Buckley utters 'wild' remarks for 'Hamnet' co-star Emily Watson at Actor Awards

-

Michael B. Jordan makes bombshell confession at Actor Awards after BAFTA controversy: 'Unbelievable'

-

Harrison Ford reflects on career as he receives Life Achievement Award at 2026 Actor Awards

-

SAG Actor Awards 2026 winners: Complete list