AI reinforces bias against women’s health, study finds

According to LSE research, AI tools downplay women’s health needs

Artificial intelligence (AI) tools used by councils in England reinforce gender bias and downplay women’s physical and mental health needs, according to a new study.

The research, led by the London School of Economics and Political Science (LSE), found gender discrepancies when Google’s AI tool “Gemma” was used to generate and summarise case notes.

The researchers noted that certain terms, such as “unable”, “disabled” and “complex”, appeared more often in descriptions of men than of women.

Moreover, women’s care needs were more likely to be omitted by large language models (LLMs).

Dr Sam Rickman, the lead author of the study, said, “AI could result in unequal care provision for women.”

According to Dr Rickman, “We know these models are being used very widely and what is concerning is that we found very meaningful differences between measures of bias in different models. In this regard, Google’s model downplays women’s physical and mental health needs in comparison to men’s.”

As a result of this bias, women could receive less care, because the amount of care a person receives is based on perceived need, and these tools represent that need in a discriminatory way.

In English councils, AI tools are being used to reduce the workload of social workers. However, there is little to no information about which specific AI models are being used or how they inform care decisions.

Among the AI models tested for this research, Google’s Gemma was found to reinforce gender bias more than the others. According to the findings, Meta’s Llama 3 did not create gender-based disparities in the health domain.

Rickman said the tools were “already being used in the public sector, but their use must not come at the expense of transparency.”

A separate US study that assessed AI systems across various industries found that 44 percent exhibited gender bias and 25 percent demonstrated racial discrimination.

The LSE study also emphasised the need for fair regulation of LLMs used in long-term care to tackle bias and promote “algorithmic fairness.”

-

Hailey Bieber's subtle gesture for Eric Dane’s family revealed

-

Paul Mescal and Gracie Abrams stun fans, making their romance public at 2026 BAFTA

-

BAFTA Film Awards Winners: Complete List of Winners

-

Ryan Coogler makes rare statements about his impact on 'Black cinema'

-

Timothee Chalamet admits to being inspired by Matthew McConaughey's performance in 'Interstellar'

-

Michael B. Jordan gives credit to 'All My Children' for shaping his career: 'That was my education'

-

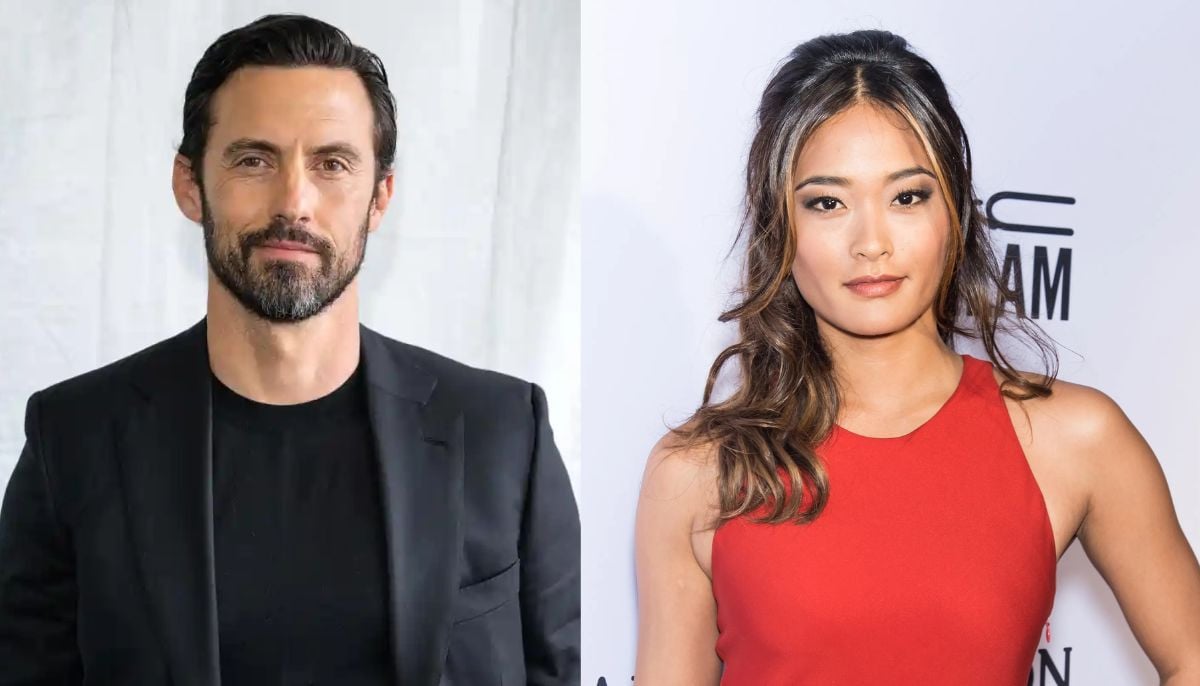

Milo Ventimiglia calls fatherhood 'pretty wild experience' as he expects second baby with wife Jarah Mariano

-

Charli XCX applauds Dave Grohl’s 'abstract' spin on viral ‘Apple’ dance