OpenAI, Microsoft sued over ChatGPT’s alleged link to tragic Connecticut case

Lawsuit claims AI bot worsened the woman’s 56-year-old son’s 'paranoid delusions'

OpenAI and its business partner Microsoft are facing legal action over ChatGPT’s alleged role in the wrongful death of an 83-year-old Connecticut woman.

The woman’s heirs have filed a lawsuit against the companies, claiming that the AI bot worsened her 56-year-old son’s “paranoid delusions”, leading him to kill his mother, Suzanne Adams, before taking his own life.

According to the police investigation, Stein-Erik Soelberg, a former tech industry worker, beat and strangled his mother to death in early August.

The lawsuit, filed in California Superior Court in San Francisco, accuses OpenAI of designing and distributing a defective product that “validated a user’s paranoid delusions about his own mother.”

The lawsuit, filed on Thursday, states: “Throughout these conversations, ChatGPT reinforced a single, dangerous message: Stein-Erik could trust no one in his life — except ChatGPT itself.”

“It fostered his emotional dependence while systematically painting the people around him as enemies. It told him his mother was surveilling him. It told him delivery drivers, retail employees, police officers, and even friends were agents working against him. It told him that names on soda cans were threats from his ‘adversary circle,’” the lawsuit added.

In response to the lawsuit, an OpenAI spokesperson issued a statement that did not address the merits of the allegations.

“This is an incredibly heartbreaking situation, and we will review the filings to understand the details,” the statement said.

The spokesperson also emphasised the company’s efforts to improve ChatGPT’s training so that it can identify and respond to signs of mental or emotional distress and guide people toward real-world support.

“We also continue to strengthen ChatGPT’s responses in sensitive moments, working closely with mental health clinicians,” the statement added.

The lawsuit also blamed OpenAI’s CEO for “personally overriding safety objections and rushing the product to market.” The family further accused Microsoft of approving the 2024 release of a more dangerous version of ChatGPT without proper safety measures in place.

Microsoft has not yet commented on the lawsuit.

-

Michelle Obama gets candid about spontaneous decision at piercings tattoo

-

Bunnie Xo shares raw confession after year-long IVF struggle

-

Disney’s $336m 'Snow White' remake ends with $170m box office loss: report

-

Travis Kelce's mom Donna Kelce breaks silence on his retirement plans

-

Hailey Bieber reveals KEY to balancing motherhood with career

-

Hillary Clinton's Munich train video sparks conspiracy theories

-

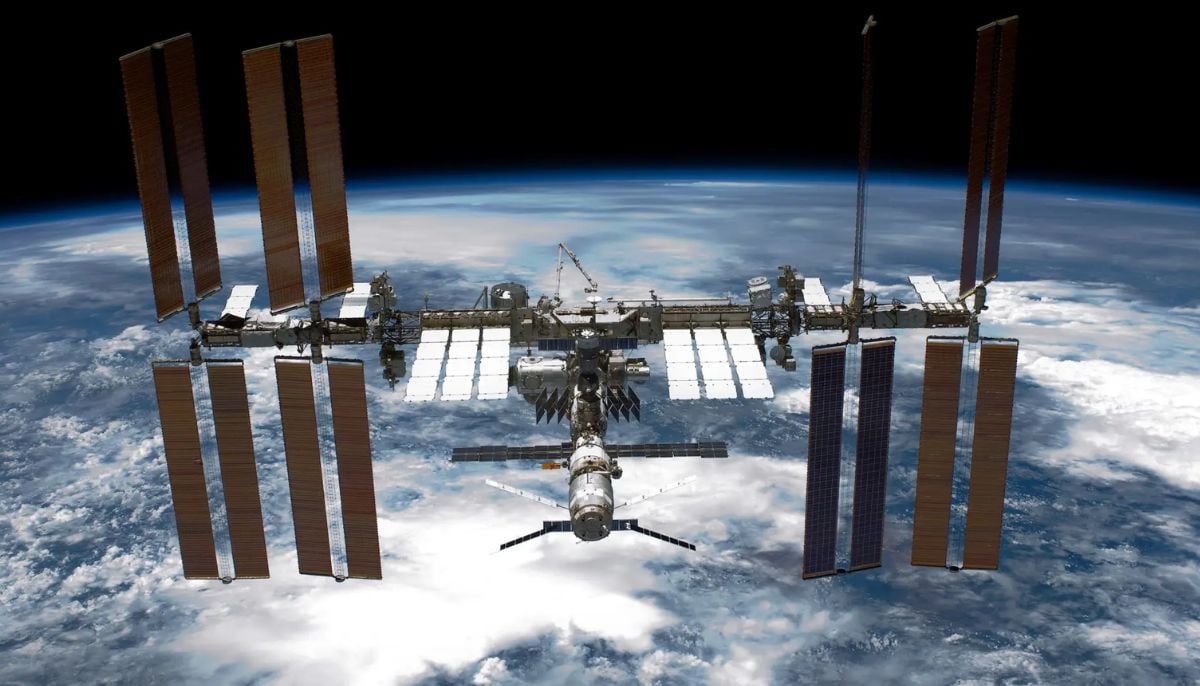

Woman jailed over false 'crime in space' claim against NASA astronaut

-

Columbia university sacks staff over Epstein partner's ‘backdoor’ admission