ChatGPT is not as great as you think, and here is why

GPT-4 struggles with simple word puzzles, and the reason is that all our text inputs are encoded as numbers for the AI to process

ChatGPT, developed by OpenAI, has taken the world by storm, surprising people with how articulate it is and how much it appears to know. Users have been impressed by the chatbot's ability to summarise complex topics and to engage in long conversations.

It's not surprising that rival AI firms have been scrambling to release their own large language models (LLMs), the type of system that powers chatbots like ChatGPT. Some of these LLMs will be built into other products, such as search engines.

Michael G. Madden, Established Professor and Head of the School of Computer Science at the University of Galway, chose to test the chatbot on Wordle, the word game from the New York Times.

Players have six attempts to guess a hidden five-letter word. After each guess, the game shows which letters, if any, appear in the word and whether they are in the right positions.
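The feedback rule is easy to state precisely in code. Below is a minimal Python sketch, assuming the standard Wordle scoring convention: G for a correct letter in the correct place, Y for a letter that is in the word but elsewhere, and "." for a letter that is not in the word. The function name `wordle_feedback` is hypothetical and not taken from the New York Times game.

```python
from collections import Counter


def wordle_feedback(guess: str, answer: str) -> str:
    """Score a guess: G = right letter, right place; Y = in the word but
    elsewhere; . = not in the word (or no unmatched copies left)."""
    guess, answer = guess.lower(), answer.lower()
    result = ["."] * len(guess)

    # Answer letters that are not exact matches; only these can earn a Y.
    unmatched = Counter(a for g, a in zip(guess, answer) if g != a)

    # First pass: mark exact matches as green.
    for i, (g, a) in enumerate(zip(guess, answer)):
        if g == a:
            result[i] = "G"

    # Second pass: mark misplaced letters as yellow, limited by how many
    # unmatched copies of that letter the answer still has.
    for i, g in enumerate(guess):
        if result[i] == "." and unmatched[g] > 0:
            result[i] = "Y"
            unmatched[g] -= 1

    return "".join(result)
```

The two passes matter for repeated letters: `wordle_feedback("eerie", "there")` returns `"Y.Y.G"`, because the answer contains only two Es and one of them is already matched in place by the final letter.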

He found that the most recent version, known as ChatGPT-4, performed surprisingly poorly on these puzzles.

One might expect an AI at the level of GPT-4 to excel at word games, since LLMs are trained on text. GPT-4 was reportedly trained on about 500 billion words.

Madden first tested GPT-4 on a Wordle puzzle for which he already knew part of the answer. Disappointingly, five of ChatGPT-4's six responses were incorrect.

Although the chatbot found some solutions, its performance was largely hit-and-miss.

According to Madden, ChatGPT-4 also struggles with palindromes, words or phrases that read the same backwards as forwards. When asked to produce them, it gives odd results.

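It may seem surprising that GPT-4 struggles with such simple puzzles, but the reason is that all text inputs are encoded as numbers before the AI processes them. Text is split into tokens, which are whole words or chunks of words, and each token is mapped to a number, so the model does not directly see the individual letters.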
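To illustrate why letter-level puzzles are hard for a model that only sees numbers, here is a toy Python tokenizer. It is a simplification under stated assumptions: the vocabulary `TOY_VOCAB` and its IDs are invented, and the greedy longest-match rule stands in for the byte-pair encoding that GPT models actually use.

```python
# Hypothetical toy vocabulary. Real tokenizers learn tens of thousands of
# subword pieces from data; these entries and IDs are made up.
TOY_VOCAB = {
    "cr": 1001, "ane": 1002, "race": 1003, "car": 1004,
    "a": 1, "c": 3, "e": 5, "n": 14, "r": 18,
}


def tokenize(text: str, vocab: dict[str, int]) -> list[int]:
    """Greedy longest-match tokenizer: at each position, take the longest
    vocabulary piece that matches and emit its integer ID."""
    ids = []
    i = 0
    longest = max(len(piece) for piece in vocab)
    while i < len(text):
        # Try the longest possible piece first, then shorter ones.
        for size in range(min(longest, len(text) - i), 0, -1):
            piece = text[i:i + size]
            if piece in vocab:
                ids.append(vocab[piece])
                i += size
                break
        else:
            # No piece of any length matched at this position.
            raise ValueError(f"no token for {text[i]!r}")
    return ids
```

`tokenize("crane", TOY_VOCAB)` returns `[1001, 1002]`: the model receives two numbers, and nothing in them says that the third letter is "a". Likewise "racecar" becomes `[1003, 1004]`; reversing those IDs gives the tokens for "carrace", not the original word, so a palindrome's symmetry is invisible at the token level.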

According to Madden, future LLMs could get around this in one of two ways. First, because ChatGPT-4 is known to understand the first letter of every word, its training data could be expanded to include mappings of every letter position within each word in its vocabulary.
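The first proposal amounts to spelling words out explicitly in the training data. Here is a minimal sketch of generating such mappings, assuming a plain-sentence format; the sentence template and the helper names `letter_position_examples` and `build_dataset` are hypothetical and do not describe how OpenAI actually prepares its data.

```python
def letter_position_examples(word: str) -> list[str]:
    """Turn one vocabulary word into one training sentence per letter,
    using 1-based positions, e.g. 'Letter 3 of "crane" is "a".'"""
    return [f'Letter {i} of "{word}" is "{ch}".' for i, ch in enumerate(word, start=1)]


def build_dataset(vocabulary: list[str]) -> list[str]:
    """Expand a whole vocabulary into letter-position sentences."""
    examples = []
    for word in vocabulary:
        examples.extend(letter_position_examples(word))
    return examples
```

For a five-letter word this yields five sentences, so under this scheme the extra data grows in proportion to the total number of letters across the vocabulary.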

The second option is more interesting and more general: future LLMs could generate code to solve problems like these. A recent study presented an approach called Toolformer, in which an LLM uses external tools to carry out tasks it typically struggles with, such as mathematical calculations.
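A minimal sketch of the second idea, loosely inspired by the Toolformer approach of an LLM calling external tools: the model writes a call such as `[IsPalindrome(racecar)]` in its output, and ordinary Python code executes it. The bracket syntax, the tool names `IsPalindrome` and `LetterAt`, and the `run_tools` helper are all hypothetical; they are not the study's actual interface.

```python
import re


def is_palindrome(text: str) -> bool:
    """True if text reads the same backwards, ignoring case and anything
    that is not a letter or digit."""
    chars = [ch.lower() for ch in text if ch.isalnum()]
    return chars == chars[::-1]


def letter_at(word: str, position: str) -> str:
    """Return the letter at a 1-based position in word."""
    return word[int(position) - 1]


# Hypothetical tool registry: names the model may call, mapped to functions
# that take the raw argument string and return a string result.
TOOLS = {
    "IsPalindrome": lambda arg: str(is_palindrome(arg)),
    "LetterAt": lambda arg: letter_at(*[part.strip() for part in arg.split(",")]),
}

# Matches calls written as [ToolName(argument)] in the model's output.
CALL_PATTERN = re.compile(r"\[(\w+)\((.*?)\)\]")


def run_tools(model_output: str) -> str:
    """Replace each [Tool(arg)] call with [Tool(arg) -> result]."""
    def execute(match: re.Match) -> str:
        name, arg = match.group(1), match.group(2)
        if name not in TOOLS:
            return match.group(0)  # leave unknown tool calls untouched
        return f"[{name}({arg}) -> {TOOLS[name](arg)}]"

    return CALL_PATTERN.sub(execute, model_output)
```

For example, `run_tools("The third letter is [LetterAt(crane, 3)].")` returns `"The third letter is [LetterAt(crane, 3) -> a]."`. In a real system the bracketed call would come from the model's generated text; here it is just a string, so the sketch shows only the tool-execution half of the loop.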

-

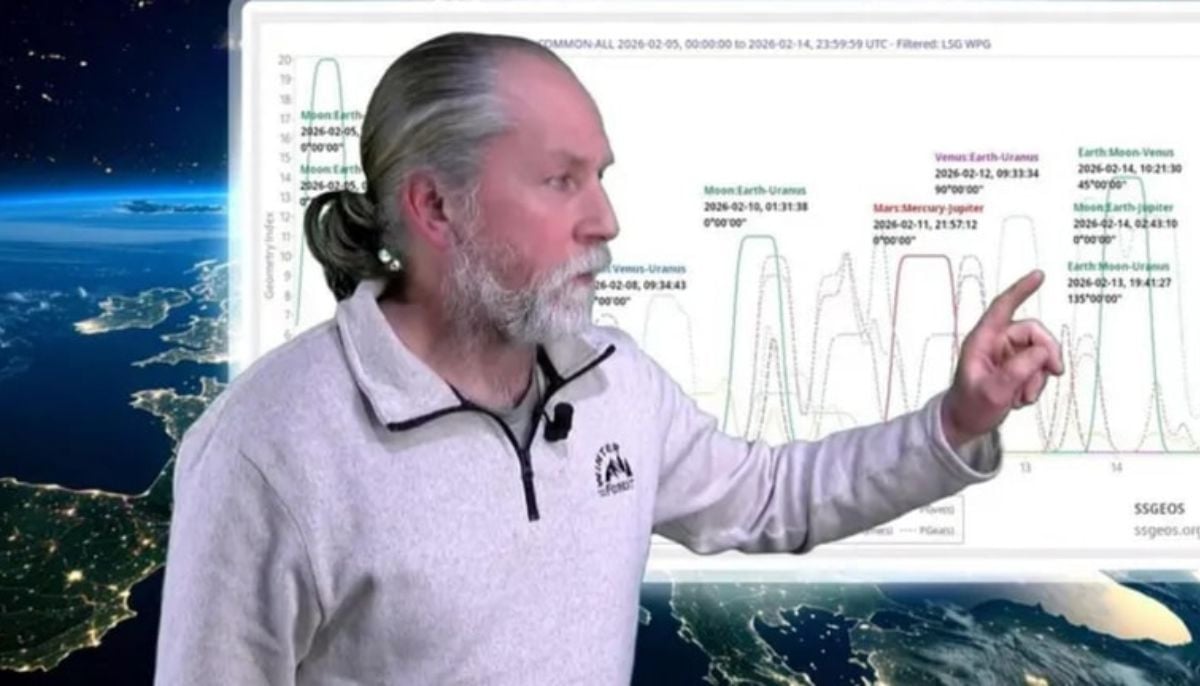

Dutch seismologist hints at 'surprise’ quake in coming days

-

SpaceX cleared for NASA Crew-12 launch after Falcon 9 review

-

Is dark matter real? New theory proposes it could be gravity behaving strangely

-

Shanghai Fusion ‘Artificial Sun’ achieves groundbreaking results with plasma control record

-

Polar vortex ‘exceptional’ disruption: Rare shift signals extreme February winter

-

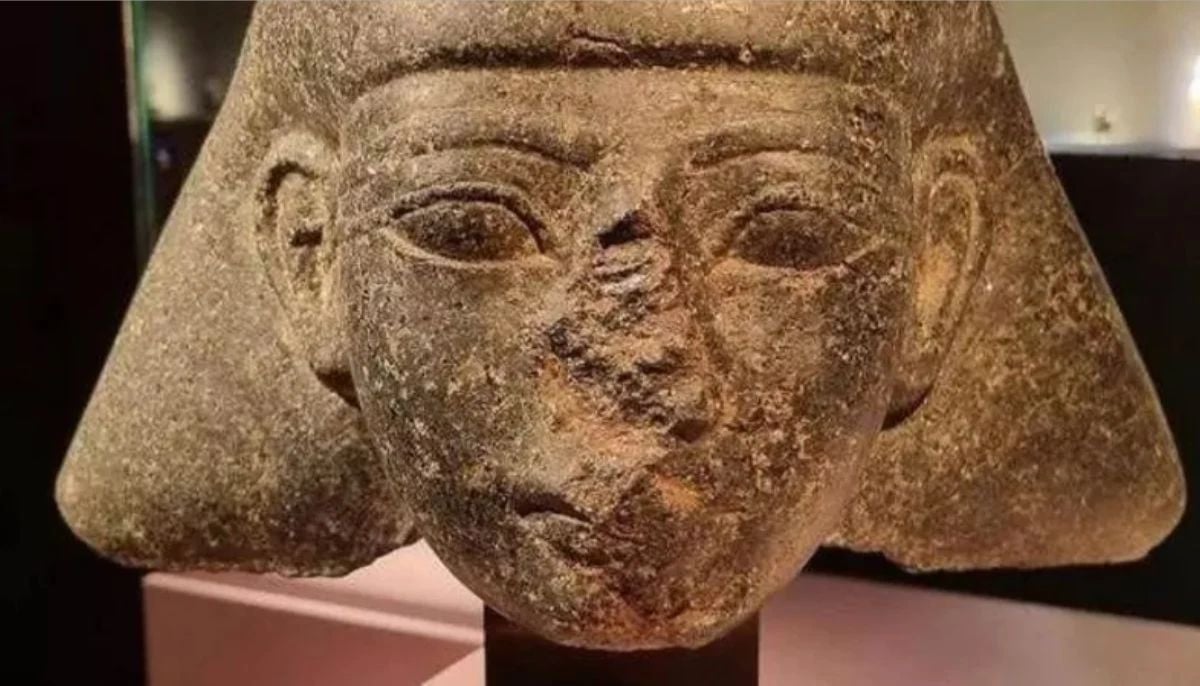

Netherlands repatriates 3500-year-old Egyptian sculpture looted during Arab Spring

-

Archaeologists recreate 3,500-year-old Egyptian perfumes for modern museums

-

Smartphones in orbit? NASA’s Crew-12 and Artemis II missions to use latest mobile tech