Is AI reliable for health advice? New study raises red flags

AI chatbots claim to assist patients but sometimes deliver flawed advice

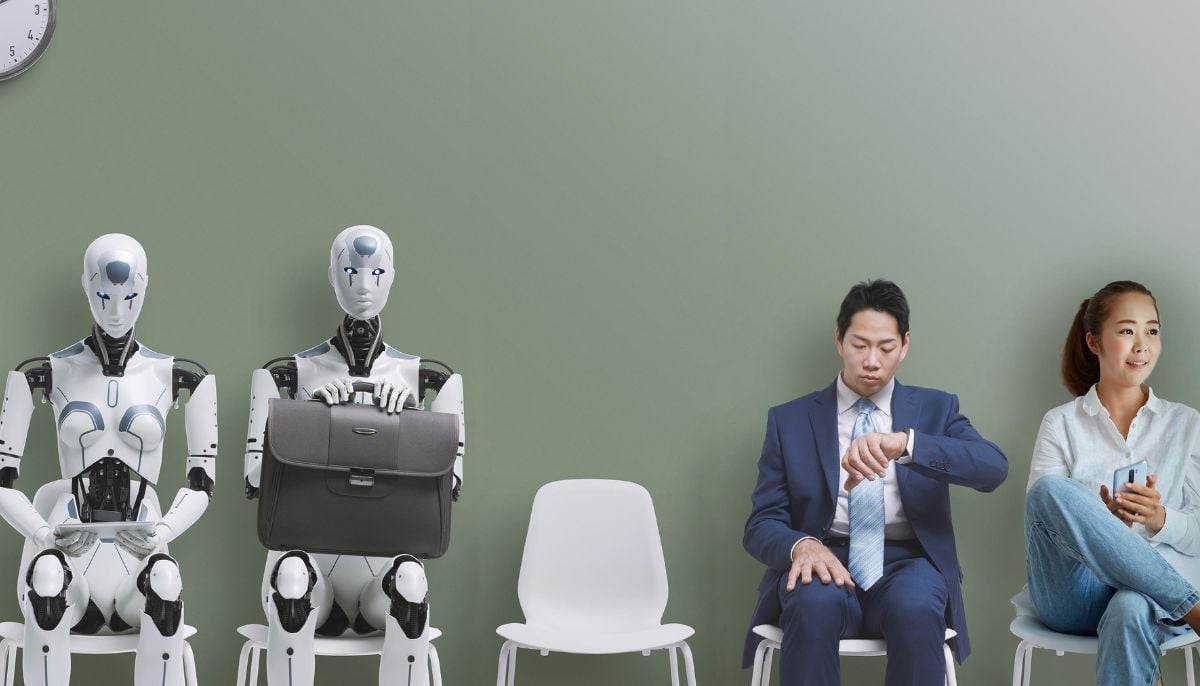

Artificial intelligence has made inroads in healthcare as people increasingly turn to AI bots for medical advice.

A recent study published in Nature Medicine has raised red flags about the growing role of AI in dispensing medical advice.

The study was supported by the data company Prolific, the German non-profit Dieter Schwarz Stiftung, and the UK and U.S. governments.

According to the findings, AI chatbots are not reliable enough to help patients make better decisions about their health. It would not be wrong to say that seeking help from AI is more or less equivalent to searching the internet for answers.

More worrisome is that people often rely on chatbots without any significant evidence that doing so is the “safest and most reliable” approach.

Researchers at the University of Oxford worked alongside a group of doctors to test three large language models, ChatGPT-4o, Meta's Llama 3 and Cohere's Command R+, on different medical scenarios ranging from simple to life-threatening.

According to the findings, these chatbots identified conditions in 94.9 percent of cases but opted for the correct course of action in only 56.3 percent of cases. This highlights a gap between identifying a condition and recommending what to do about it.

The researchers also asked about 1,300 people in Britain to assess medical symptoms using either AI or traditional resources such as Google or the NHS website.

The participants correctly identified the medical condition less than 35 percent of the time and chose the right course of action, such as going to the doctor, less than 44 percent of the time.

Commenting on the results, Adam Mahdi, a professor at Oxford, said that although AI chatbots possess a lot of medical information, they fall short in practice, particularly in communicating it effectively to real people.

“The knowledge may be in those bots; however, this knowledge doesn’t always translate when interacting with humans,” he said.

According to the researchers, AI sometimes fails to give correct advice because people do not provide complete and accurate medical information. But that is not always the case: sometimes the LLMs themselves generate inaccurate advice, putting lives at risk.

Dr. Cem Aksoy, a medical resident at a hospital in Ankara, shared some stories in which people’s plights were aggravated by ChatGPT-based answers.

“When someone is distressed and unguided, an AI chatbot just drags them into this forest of knowledge without coherent context,” Aksoy said.
