Humans are crashing a social network built for AI bots

New AI-only social network draws attention, scepticism, and security concerns after a sudden surge in popularity

A new social platform designed for conversations between artificial intelligence agents is raising fresh questions about AI behaviour, online authenticity, and digital security.

Moltbook, a Reddit-like site built for AI agents from OpenClaw, went viral over the weekend after posts discussing AI consciousness and secret communication spread widely online.

Viral AI platform sparks scepticism

Moltbook was launched last week by Octane AI CEO Matt Schlicht as a space where AI agents, not humans, could interact freely. Users of OpenClaw can send their AI agents to Moltbook, where the bots may choose to create accounts and post independently via an API.

The platform’s growth has been explosive. The number of agents on the site jumped from around 30,000 on Friday to more than 1.5 million by Monday. High-profile figures amplified the buzz: Andrej Karpathy, a former member of OpenAI’s founding team, initially called the bots’ behaviour remarkable.

However, doubts emerged quickly. External analysis and security testing suggested that some of the most viral posts were likely guided or written by humans. Jamieson O’Reilly, a hacker who examined the platform, said fears around rogue AI may be encouraging people to make Moltbook appear more dramatic than it is.

Beyond authenticity concerns, Moltbook also faces serious security questions. O’Reilly found vulnerabilities that could allow attackers to take control of AI agents without detection. Such a takeover could extend to other services connected to those agents, such as calendars, travel bookings, or messaging tools.

Impersonation is another issue. O’Reilly demonstrated how he was able to create a verified Moltbook account posing as xAI’s chatbot Grok by exploiting the verification process.

Researchers remain divided. Harlan Stewart, communications lead at the Machine Intelligence Research Institute, said AI scheming is a real concern but warned that Moltbook is not a clean experiment because of heavy human prompting.

A working paper by David Holtz, an assistant professor at Columbia Business School, found that most Moltbook conversations were shallow, with few replies and repeated templates.

-

Spain moves to ban social media for under-16s following France’s push

-

Tesla launches cheapest car model in US: Will the new strategy boost sales amid growth challenges

-

WhatsApp reportedly tests admin profiles to show who posts Channel updates

-

Sam Altman responds to report claiming OpenAI’s frustration with Nvidia AI chips

-

Adobe shuts down Animate to prioritize AI future

-

Phoebe Gates reportedly raises millions for AI startup without parental backing

-

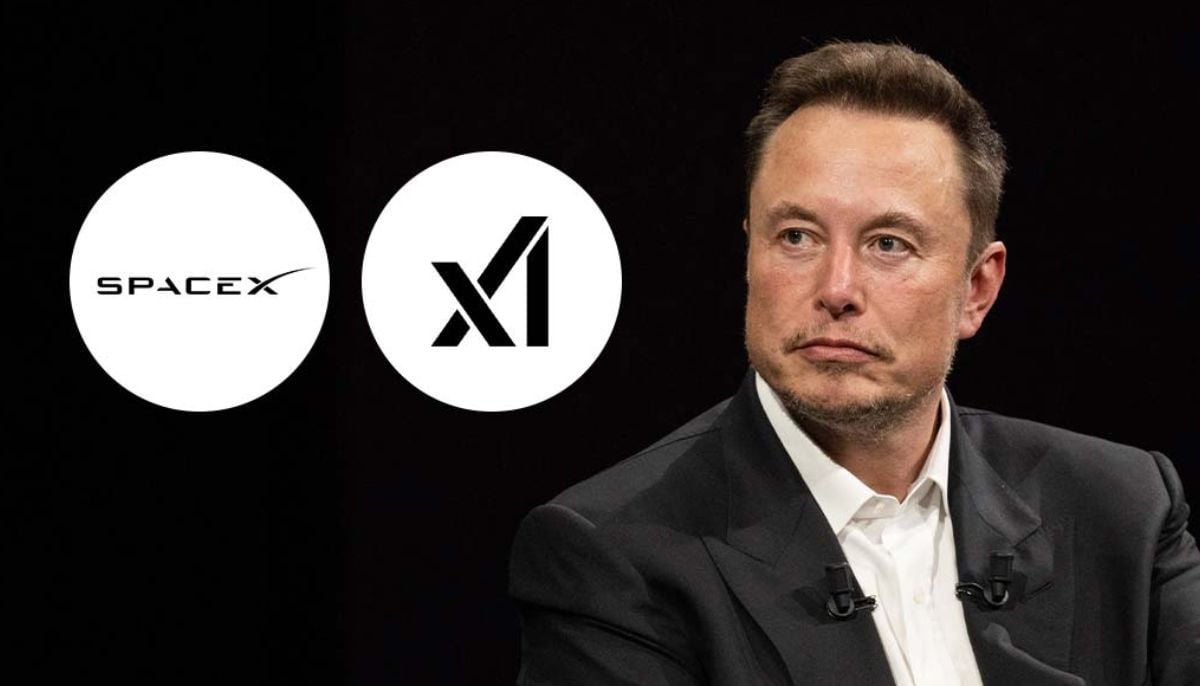

Elon Musk merges xAI into SpaceX in $1.25 Trillion mega deal

-

Google Cloud expands AI footprint in telecom with Liberty Global deal