From ChatGPT to GhostGPT: dark side of generative AI in cybercrime

GhostGPT is an uncensored generative AI chatbot that cybercriminals are using to create malware and phishing scams

Generative AI, widely seen as the future of artificial intelligence (AI), is unfortunately becoming an unprecedented threat to cybersecurity. A key reason is the emergence of GhostGPT, a generative AI tool designed specifically for criminal activity.

The malicious chatbot emerged in late 2024 and fuels cybercrime by creating malware and crafting phishing emails, business email compromise (BEC) scams and more. Its advanced offensive capabilities mean that even low-skilled criminals can pose serious cybersecurity threats.

What is GhostGPT?

Put simply, it is a tool built for crime. Unlike mainstream AI models such as ChatGPT, which are bound by ethical safeguards, GhostGPT operates without restrictions. Security analysts believe it is either a jailbroken large language model (LLM) or an open-source model stripped of its safety protocols. This lets it generate:

- Personalized phishing emails that mimic corporate tones and individual writing styles

- Realistic fake login pages to steal credentials

- Polymorphic malware that evolves to evade detection

- Step-by-step guides that help attackers exploit vulnerabilities

In addition, GhostGPT does not log interactions, which makes attribution nearly impossible and gives cybercriminals a dangerous layer of anonymity.

Supercharging phishing and malware

Phishing is the top cyber threat, affecting 84% of UK businesses in 2024 alone. GhostGPT makes this worse by producing highly convincing scams in seconds with minimal effort.

Tools like GhostGPT also lower the barrier to sophisticated attacks. Creating polymorphic malware once required expert skills and time; now, novice hackers can generate malicious code with simple prompts.

A 2023 IBM study found that LLMs can produce functional malware with minimal input.

Future of cybercrime

While GhostGPT poses a severe challenge, its risks can be mitigated by regular patching, multi-factor authentication (MFA), and advanced employee training.

Organisations can also deploy endpoint detection and response (EDR) and extended detection and response (XDR) tools to identify anomalies, and use threat intelligence for real-time monitoring of emerging attack methods.

-

Is studying medicine useless? Elon Musk’s claim that AI will outperform surgeons sparks debate

-

Travis Kelce takes hilarious jab at Taylor Swift in Valentine’s Day post

-

Will Smith surprises wife Jada Pinkett with unusual gift on Valentine's Day

-

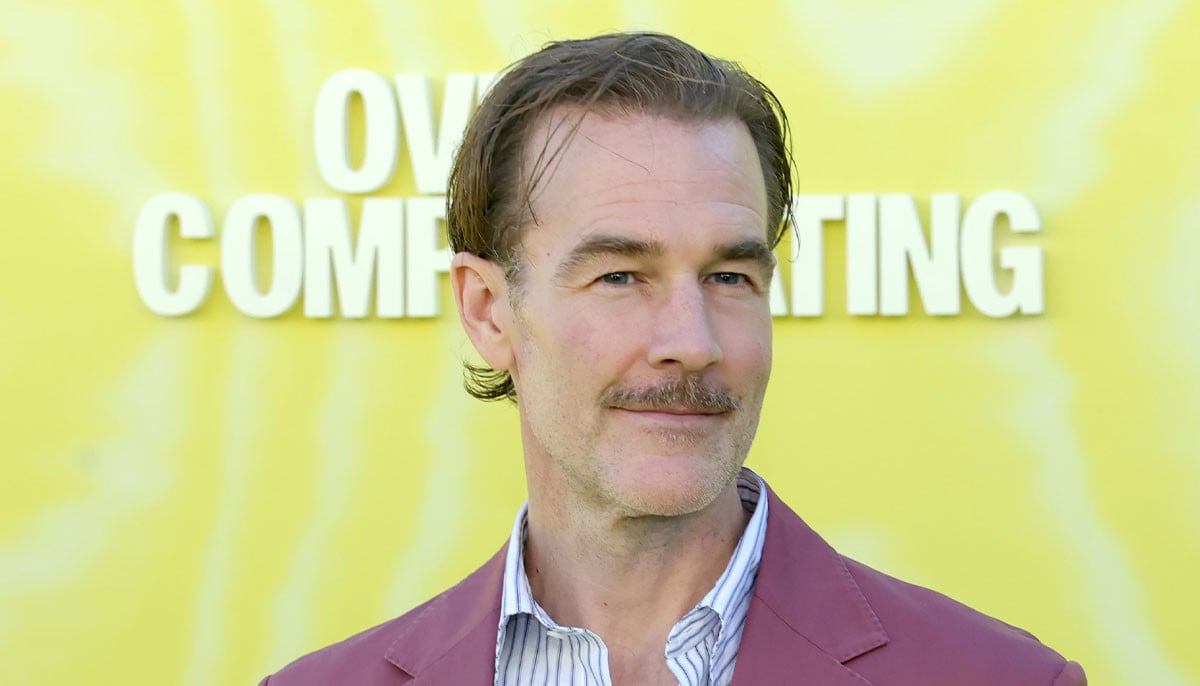

James Van Der Beek's friends helped fund ranch purchase before his death at 48

-

Brooklyn Beckham hits back at Gordon Ramsay with subtle move over remark on his personal life

-

Jennifer Love Hewitt reminisces about workign with Betty White

-

Can Sydney Sweeney's brand compete with Kim Kardashian's SKIMS? Expert reveals

-

Kim Kardashian, Lewis Hamilton's romance being called a calculated move?