Suspect used ChatGPT to plan Cybertruck blast in Las Vegas

ChatGPT did provide warnings against harmful or illegal activities, claims OpenAI

WASHINGTON: The suspect in the Cybertruck explosion outside the Trump International Hotel in Las Vegas on New Year's Day used the artificial intelligence chatbot ChatGPT to plan the blast, officials told reporters on Tuesday.

The suspect, identified as Matthew Livelsberger, 37, used ChatGPT to try to work out how much explosive was needed to trigger the blast, the officials added.

Authorities last week identified the person found dead inside the Cybertruck as Livelsberger, an active-duty Army soldier from Colorado Springs, and said he acted alone.

The Federal Bureau of Investigation (FBI) says the incident appeared to be a case of suicide.

Why it's important

The Las Vegas Metropolitan Police Department said on Tuesday that the Cybertruck blast was the first incident it was aware of on US soil in which ChatGPT had been used to help build an explosive device.

Critics of artificial intelligence have warned it could be harnessed for harmful purposes, and the Las Vegas attack could add to that criticism.

"Of particular note, we also have clear evidence in this case now that the suspect used ChatGPT artificial intelligence to help plan his attack," Sheriff Kevin McMahill of the Las Vegas Metropolitan Police Department told a press conference.

"This is the first incident that I am aware of on US soil where ChatGPT is utilised to help an individual build a particular device," McMahill added.

The FBI said there was no definitive link between a truck attack in New Orleans that killed more than a dozen people and the Cybertruck explosion, which left seven people with minor injuries.

Officials added that the suspect had no animosity towards US President-elect Donald Trump and probably had post-traumatic stress disorder.

Livelsberger's phone had a six-page manifesto that authorities were investigating, police said.

OpenAI's stance

ChatGPT maker OpenAI said the company was "committed to seeing AI tools used responsibly" and that its "models are designed to refuse harmful instructions."

"In this case, ChatGPT responded with information already publicly available on the internet and provided warnings against harmful or illegal activities," the company said in a statement cited by Axios.

-

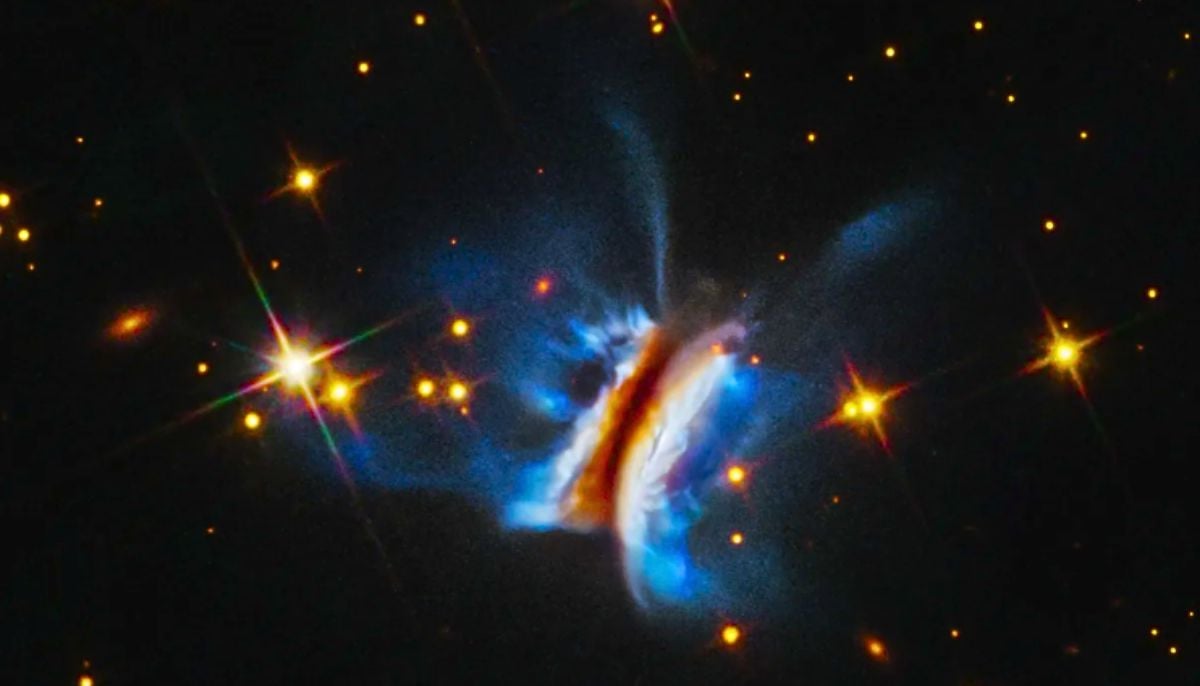

NASA's Hubble Space Telescope discovers ‘Dracula Disk', 40 times bigger than solar system

-

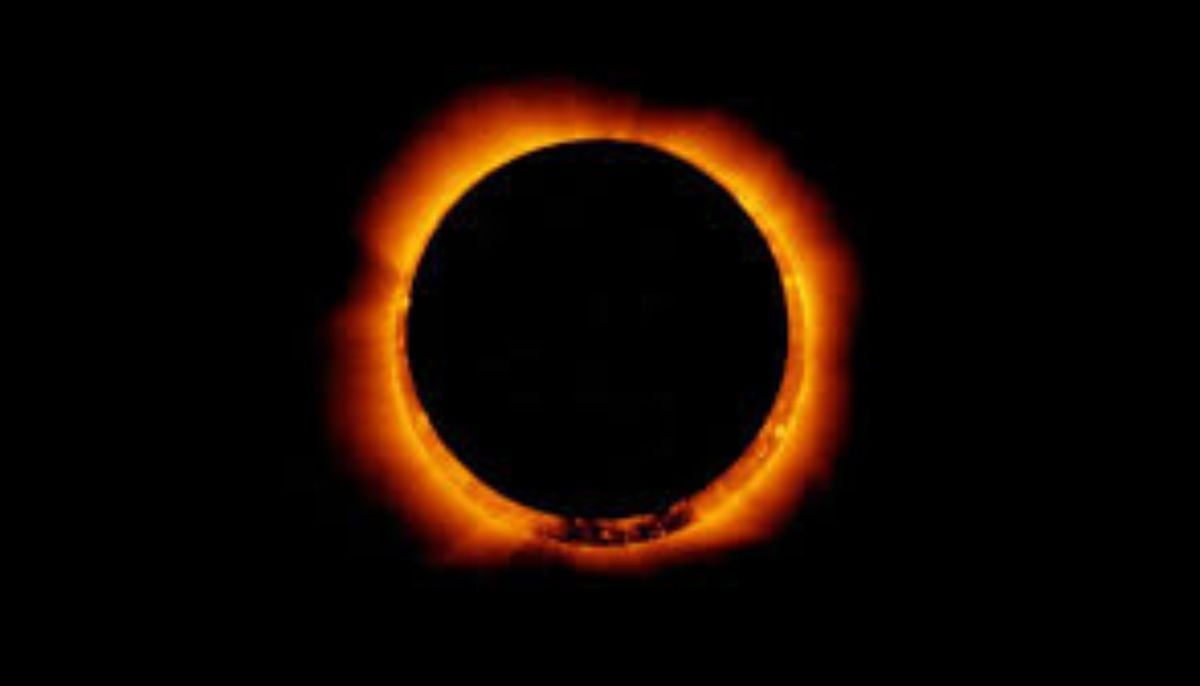

Annular solar eclipse 2026: Where and how to watch ‘ring of fire’

-

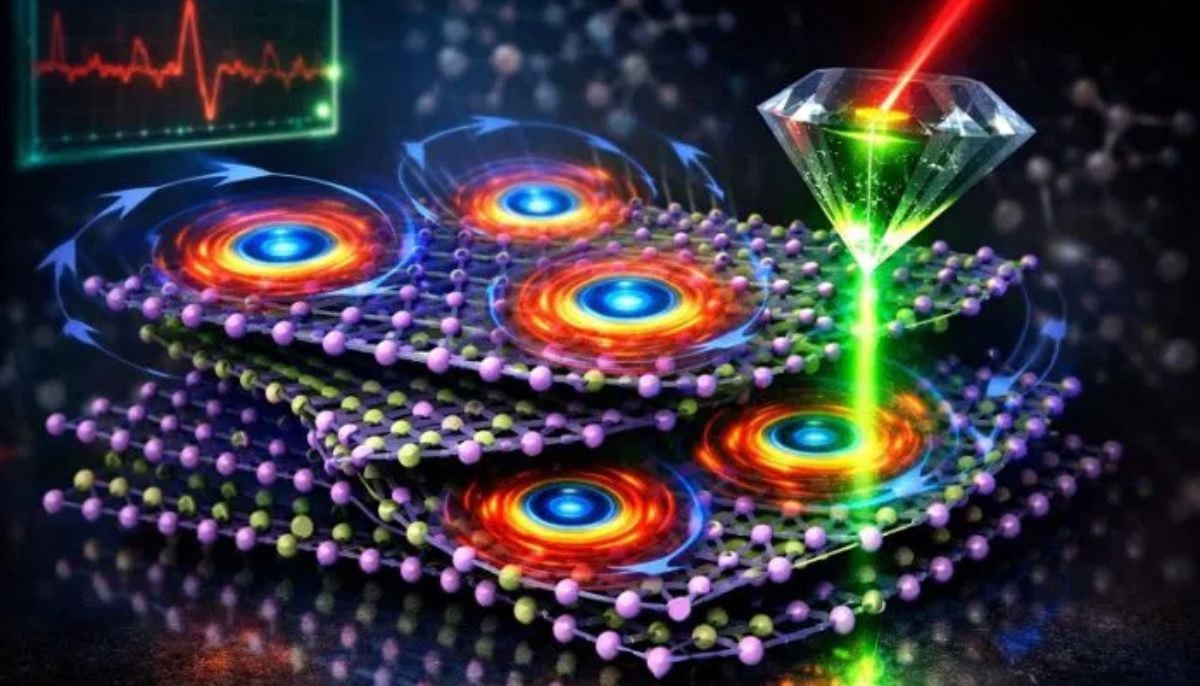

Scientists discover rare form of 'magnets' that might surprise you

-

Humans may have 33 senses, not 5: New study challenges long-held science

-

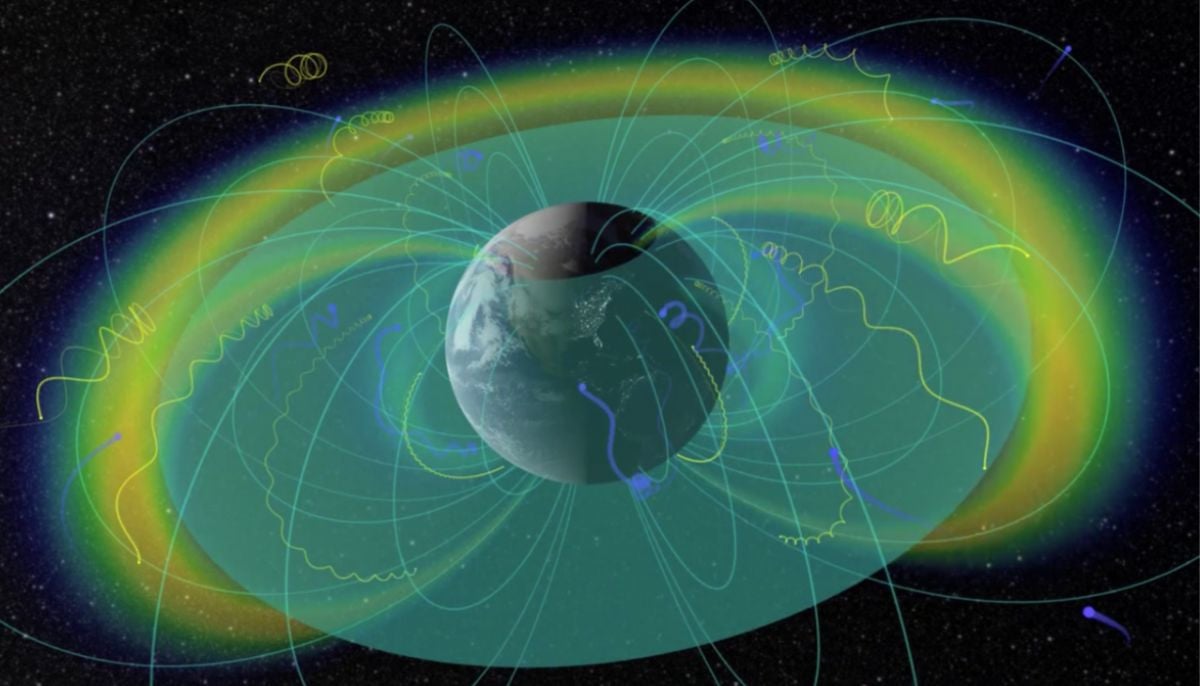

Northern Lights: Calm conditions persist amid low space weather activity

-

SpaceX pivots from Mars plans to prioritize 2027 Moon landing

-

Dutch seismologist hints at 'surprise’ quake in coming days

-

SpaceX cleared for NASA Crew-12 launch after Falcon 9 review