OpenAI leaders ask for regulation of "superintelligent" AI to protect humanity

The leaders urge companies working on AI to co-ordinate with one another to contain potential risks until a regulatory body is established

On Wednesday, OpenAI leaders posted a brief note urging the formation of an international regulator to monitor "superintelligent" AI and prevent it from potentially destroying humanity, the Guardian reported.

With researchers already warning of the risks AI poses, the creators of ChatGPT are calling for a regulatory body akin to the International Atomic Energy Agency to contain them.

The note, posted on the company's website by OpenAI co-founders Greg Brockman and Ilya Sutskever and chief executive Sam Altman, calls for an international regulator to begin working on how to “inspect systems, require audits, test for compliance with safety standards, [and] place restrictions on degrees of deployment and levels of security” in order to reduce the "existential risk" the technology poses.

“It’s conceivable that within the next 10 years, AI systems will exceed expert skill level in most domains, and carry out as much productive activity as one of today’s largest corporations,” they wrote.

“In terms of both potential upsides and downsides, superintelligence will be more powerful than other technologies humanity has had to contend with in the past. We can have a dramatically more prosperous future; but we have to manage risk to get there. Given the possibility of existential risk, we can’t just be reactive,” they continued.

In the meantime, until a regulatory body is established, the OpenAI leaders hope for "some degree of co-ordination" among companies working on AI to prioritise safety and care in their projects.

Last week, during a congressional hearing in the US Senate, Altman also expressed concern about AI-powered chatbots such as ChatGPT, describing them as "a significant area of concern".

He also said the technology "required rules and guidelines to prevent misuse" and suggested that "introducing licensing and testing requirements for the development of AI" could prevent potential harm from the technology.

-

Shanghai Fusion ‘Artificial Sun’ achieves groundbreaking results with plasma control record

-

Polar vortex ‘exceptional’ disruption: Rare shift signals extreme February winter

-

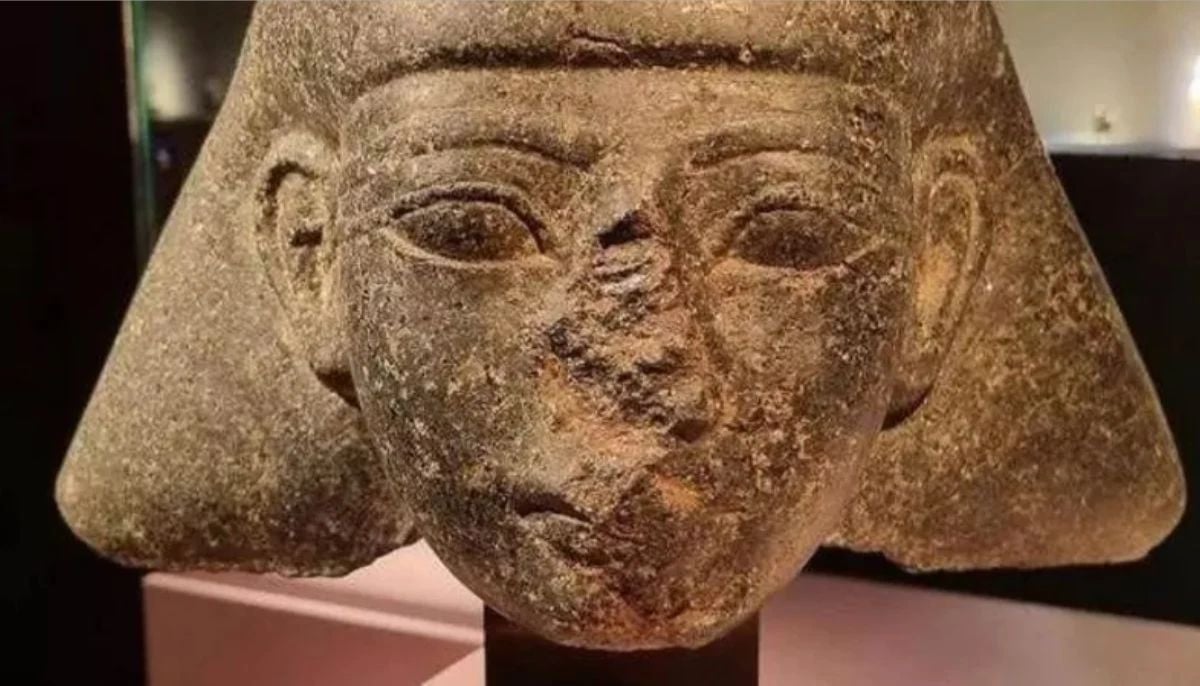

Netherlands repatriates 3500-year-old Egyptian sculpture looted during Arab Spring

-

Archaeologists recreate 3,500-year-old Egyptian perfumes for modern museums

-

Smartphones in orbit? NASA’s Crew-12 and Artemis II missions to use latest mobile tech

-

Rare deep-sea discovery: ‘School bus-size’ phantom jellyfish spotted in Argentina

-

NASA eyes March moon mission launch following test run setbacks

-

February offers 8 must-see sky events including rare eclipse and planet parade