Lawyer embarrassed in court after ChatGPT messes up legal research

The lawyer said that he "greatly regrets" relying on the chatbot, and was "unaware that its content could be false"

A US lawyer is facing a court hearing of his own after his firm used the AI chatbot ChatGPT for legal research.

A New York lawyer found himself in hot water when the judge informed him that "a filing was found to reference example legal cases that did not exist".

The lawyer who used the tool told the court he was "unaware that its content could be false," BBC reported Sunday.

ChatGPT is an AI-operated chatbot that creates original text on request, but also warns users that it can "produce inaccurate information".

Originally, the case was about a man who sued an airline over an alleged personal injury. His legal team had submitted a brief that cited several previous court cases in an attempt to prove, using precedent, why the case should move forward, the report said.

However, the airline's lawyers said they could not find several of the cases referenced in the brief.

"Six of the submitted cases appear to be bogus judicial decisions with bogus quotes and bogus internal citations," Judge Castel wrote, ordering the man's legal team to explain itself.

After several filings, it emerged that Peter LoDuca, the lawyer for the plaintiff, had not prepared the research himself. His colleague Steven A Schwartz, from the same law firm, had used ChatGPT to look for similar previous cases.

Schwartz, who has been an attorney for more than 30 years, clarified in his statement: "Mr LoDuca had not been part of the research and had no knowledge of how it had been carried out."

He added that he "greatly regrets" relying on the chatbot, which he said he had never used for legal research before, and that he was "unaware that its content could be false".

He has sworn never again to "supplement" his legal research with AI "without absolute verification of its authenticity".

Both lawyers, from the firm Levidow, Levidow & Oberman, have been ordered by the judge to defend their conduct at a hearing scheduled for 8 June.

Since its debut in November 2022, ChatGPT has been used by millions of users. It is designed to imitate various writing styles and respond to queries in language that seems natural and human.

Concerns have previously been raised at government level about the possible dangers of artificial intelligence (AI), including bias and the spread of false information.

-

Astronauts face life threatening risk on Boeing Starliner, NASA says

-

Giant tortoise reintroduced to island after almost 200 years

-

Blood Falls in Antarctica? What causes the mysterious red waterfall hidden in ice

-

Scientists uncover surprising link between 2.7 million-year-old climate tipping point & human evolution

-

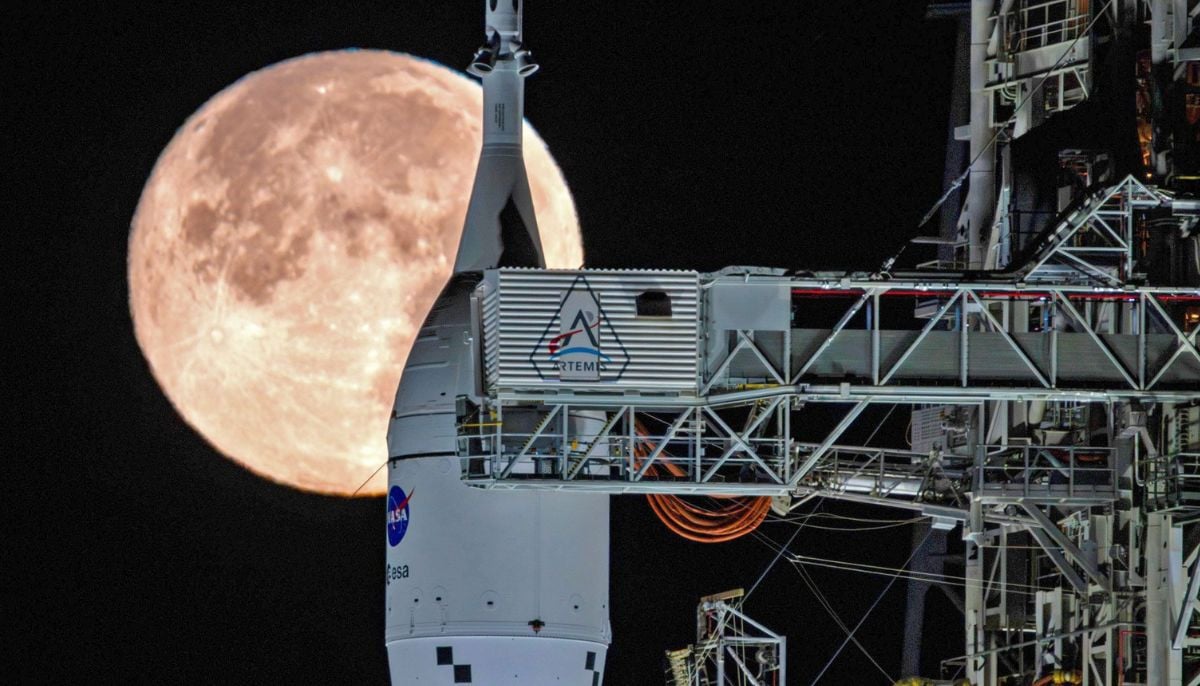

NASA takes next step towards Moon mission as Artemis II moves to launch pad operations following successful fuel test

-

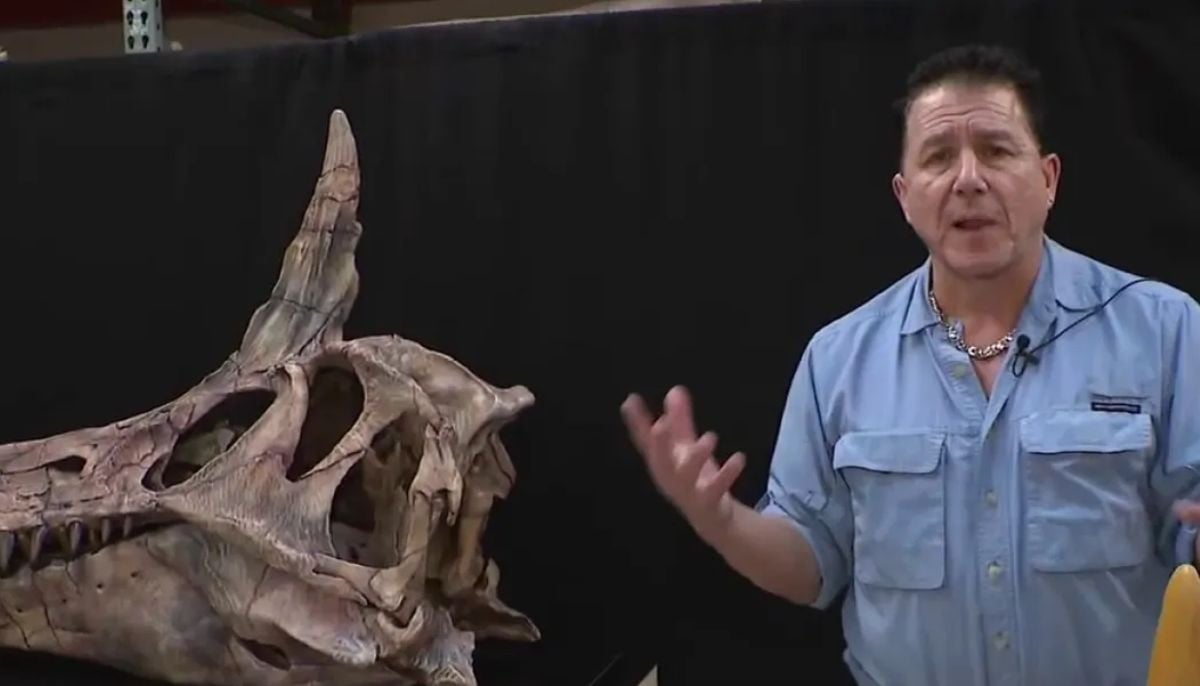

Spinosaurus mirabilis: New species ready to take center stage at Chicago Children’s Museum in surprising discovery

-

Climate change vs Nature: Is world near a potential ecological tipping point?

-

125-million-year-old dinosaur with never-before-seen spikes stuns scientists in China