Google flags child's groin images taken for doctor as sexual abuse material

To the father's shock, a video of his son on his phone was also flagged, and the San Francisco Police Department used it to open an investigation into him

Google has refused to restore a man's account after flagging medical images of his son as "child sexual abuse material" (CSAM), The Guardian reported, citing The New York Times.

Experts say such errors are inevitable. They have long warned about the limitations of automated detection of child sexual abuse material.

Because tech giants like Google hold enormous amounts of private data, they are under pressure to use technology to tackle the problem.

The New York Times identified the man as Mark. He had photographed his son's groin to show a doctor, who used the images to diagnose the child and prescribe antibiotics.

Because the photos had been automatically uploaded to the cloud, Google flagged them as CSAM.

Two days later, Mark lost access to all his Google accounts, including his Google Fi phone service.

He was told his content was “a severe violation of the company’s policies and might be illegal”.

To Mark's shock, Google also flagged a video of his son on his phone, which the San Francisco Police Department then used to open an investigation into him.

Although Mark was cleared of any wrongdoing, Google has refused to reinstate his account.

A Google spokesperson said the company's technology detects only material that US law defines as CSAM.

Daniel Kahn Gillmor, a senior staff technologist at the ACLU, said this was just one example of how such systems can harm people, The Guardian reported.

Such algorithms have several limitations, among them an inability to distinguish images taken for sexual abuse from those taken for medical purposes.

“These systems can cause real problems for people,” he said.

-

Startup aims to brighten night skies with space mirrors

-

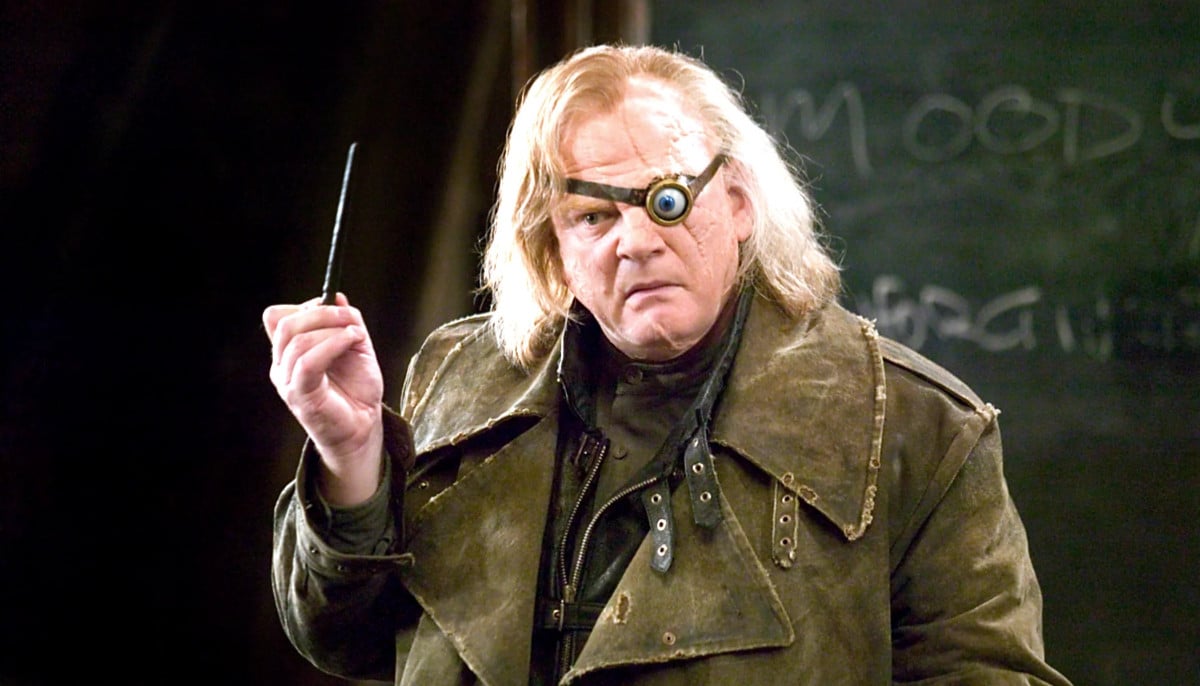

'Harry Potter' star Brendan Gleeson reluctantly addresses JK Rowling's trans views

-

Bamboo: World’s next sustainable ‘superfood’ hiding in plain sight

-

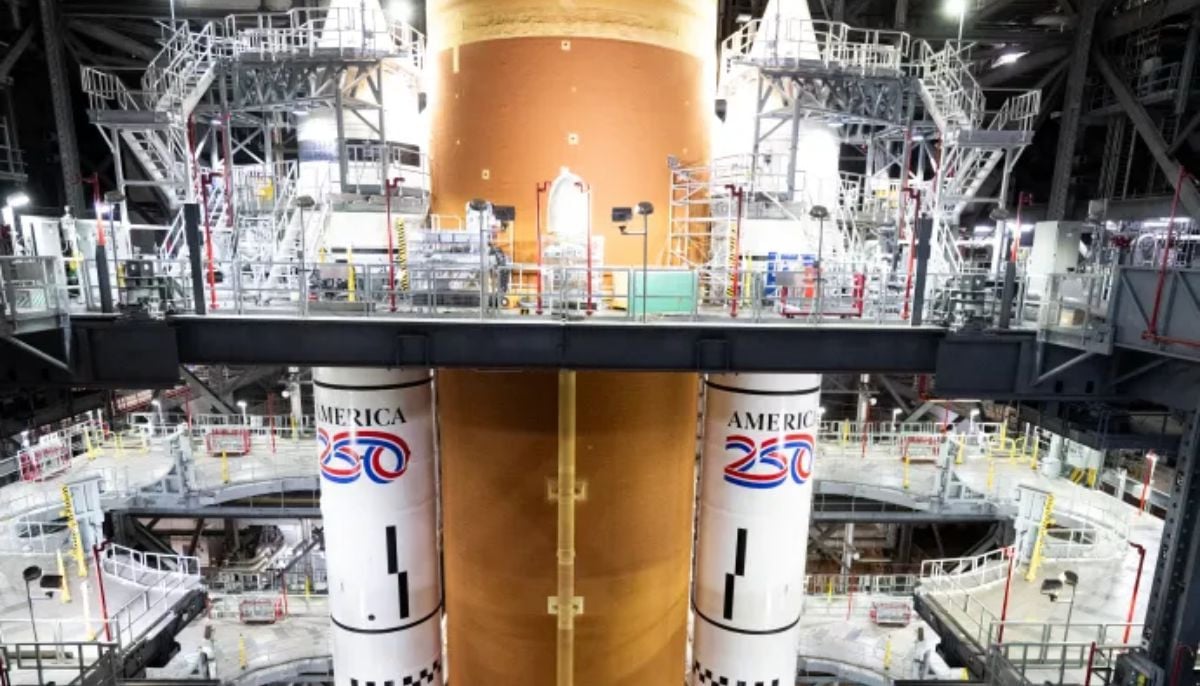

NASA Artemis II rocket heads to the launch pad for a historic crewed mission to the Moon

-

Blood Moon: When and where to watch in 2026

-

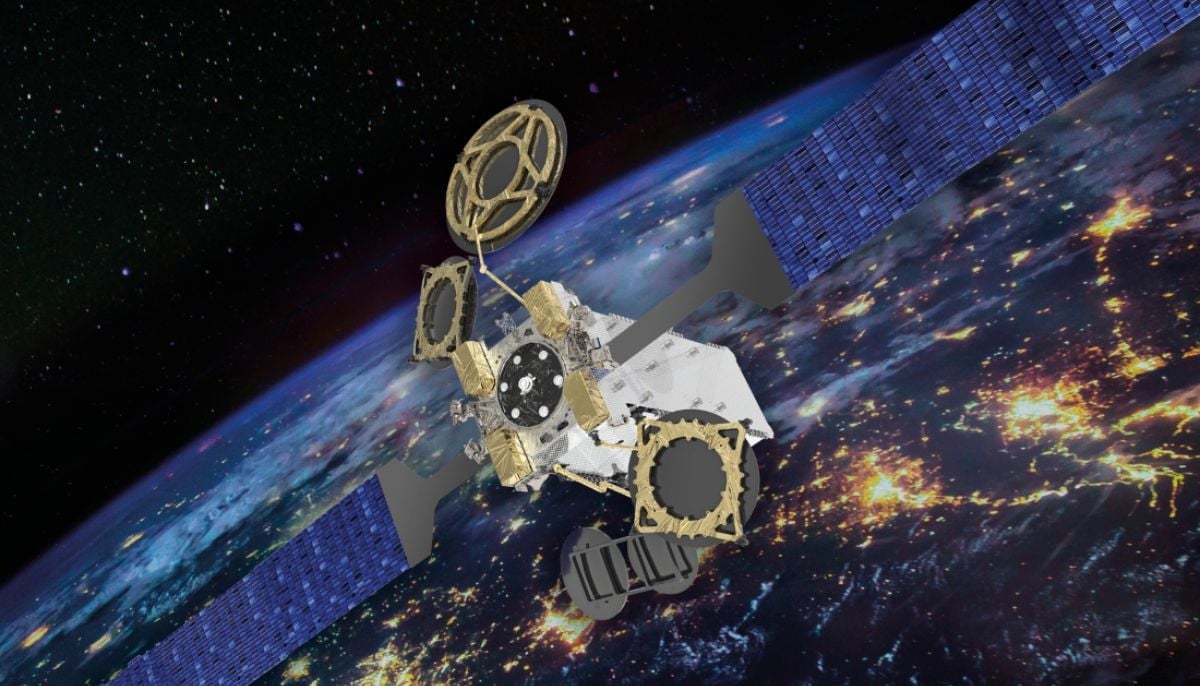

Elon Musk’s Starlink rival Eutelsat partners with MaiaSpace for satellite launches

-

Blue Moon 2026: Everything you need to know

-

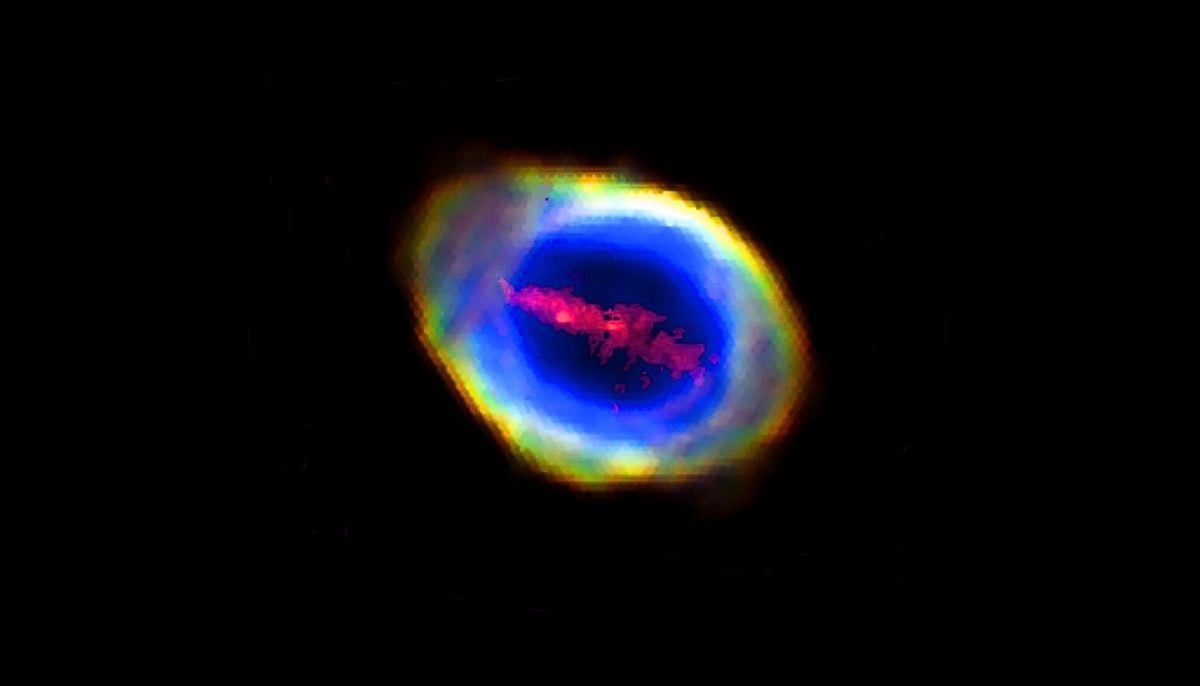

Scientists unravel mystery of James Webb’s ‘little red dots’ in deep space