Apple reveals Eye Tracking, other new features for iPhone, iPad

Tim Cook's American tech giant aims to provide "best possible" experience to users

Apple on Wednesday revealed new accessibility features that will be available later this year, the most prominent of which is Eye Tracking.

Eye Tracking enables people with physical limitations to operate an iPad or iPhone using their eyes.

In addition, more accessibility features will be added to visionOS. Vocal Shortcuts will let users complete tasks by assigning their own custom sound. Vehicle Motion Cues will help reduce motion sickness when using an iPhone or iPad as a passenger in a moving vehicle. Music Haptics will give users who are deaf or hard of hearing a new way to experience music through the iPhone's Taptic Engine.

Drawing on Apple silicon, AI and machine learning, these features combine Apple's hardware and software to advance the company's decades-long goal of making products that are accessible to everyone.

“We believe deeply in the transformative power of innovation to enrich lives,” said Tim Cook, Apple’s CEO.

“That’s why for nearly 40 years, Apple has championed inclusive design by embedding accessibility at the core of our hardware and software. We’re continuously pushing the boundaries of technology, and these new features reflect our long-standing commitment to delivering the best possible experience to all of our users.”

-

Astronauts face life threatening risk on Boeing Starliner, NASA says

-

Giant tortoise reintroduced to island after almost 200 years

-

Blood Falls in Antarctica? What causes the mysterious red waterfall hidden in ice

-

Scientists uncover surprising link between 2.7 million-year-old climate tipping point & human evolution

-

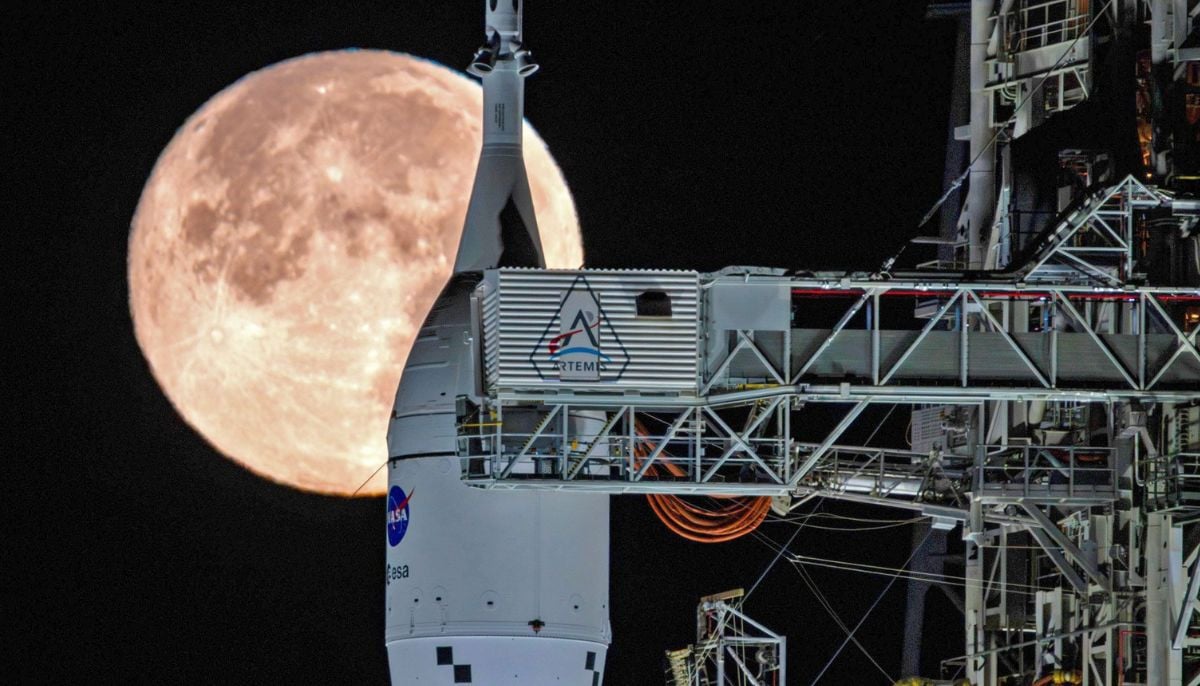

NASA takes next step towards Moon mission as Artemis II moves to launch pad operations following successful fuel test

-

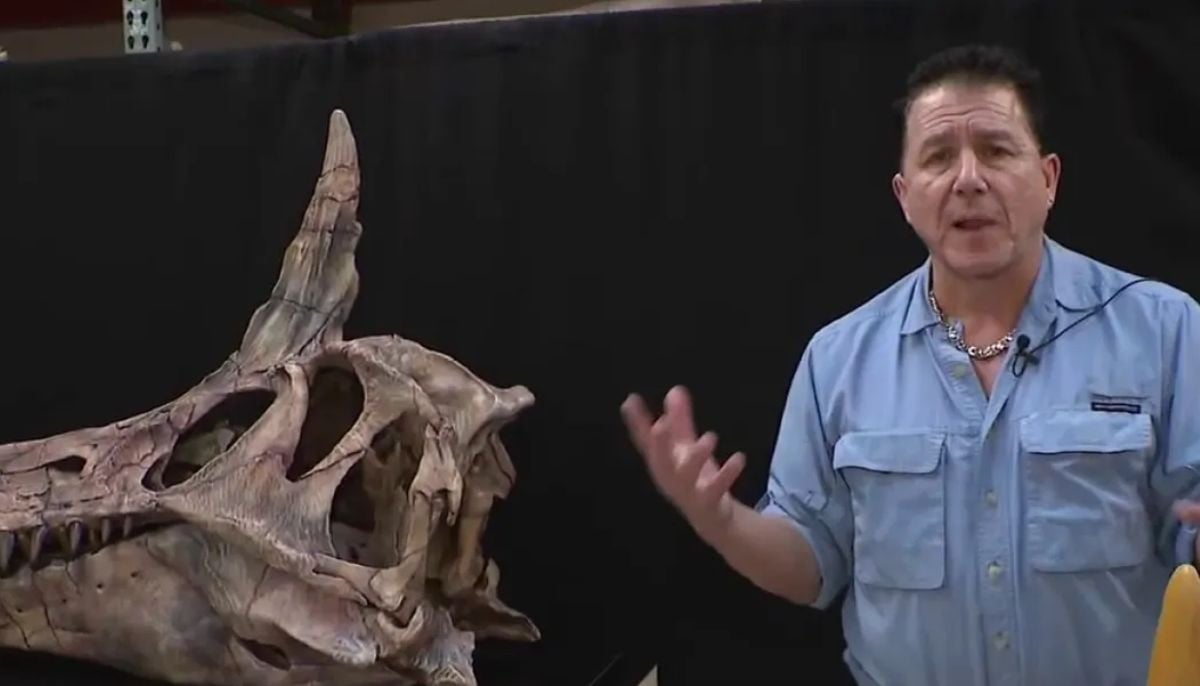

Spinosaurus mirabilis: New species ready to take center stage at Chicago Children’s Museum in surprising discovery

-

Climate change vs Nature: Is world near a potential ecological tipping point?

-

125-million-year-old dinosaur with never-before-seen spikes stuns scientists in China