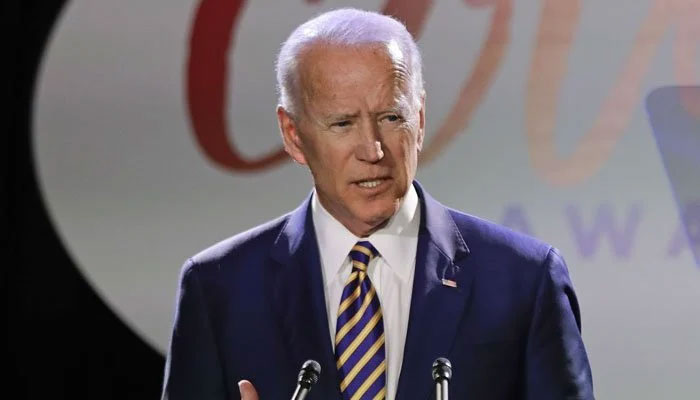

Biden holds talks with Microsoft and Google CEOs on risks posed by AI

AI usage sparks concerns of privacy violations, biased hiring, scams, and misinformation campaigns

President Joe Biden recently attended a White House meeting with the CEOs of major artificial intelligence (AI) companies, including Google and Microsoft, to discuss the potential risks of AI and the safeguards needed to address them.

AI has surged in popularity. Chatbots like ChatGPT have captured the public's attention, and many companies have launched similar products that could change the way people work.

However, as more people use AI for tasks such as medical diagnoses and drafting legal briefs, concerns have grown about privacy violations, bias in hiring, and the potential for scams and misinformation campaigns.

The two-hour meeting also included Vice President Kamala Harris and several administration officials. Harris acknowledged AI's potential benefits but raised concerns about safety, privacy, and civil rights. She called on the AI industry to ensure its products are safe and said the administration is open to new regulations and legislation to address AI's potential harms.

Alongside the meeting, the administration announced a $140 million investment from the National Science Foundation to create seven new AI research institutes. It also said leading AI developers will take part in a public evaluation of their AI systems.

The Biden administration has already taken some steps on AI, such as signing an executive order directing federal agencies to root out bias in their use of AI and releasing a Blueprint for an AI Bill of Rights and an AI risk management framework. Even so, some experts argue that the US has not done enough to regulate AI.

The Federal Trade Commission and the Department of Justice's Civil Rights Division have recently announced that they intend to use their existing legal authorities to combat AI-related harms. However, it remains to be seen whether the US will adopt an approach as tough as the one European governments have taken in regulating technology, including deepfakes and misinformation.

-

'We were deceived': Noam Chomsky's wife regrets Epstein association

-

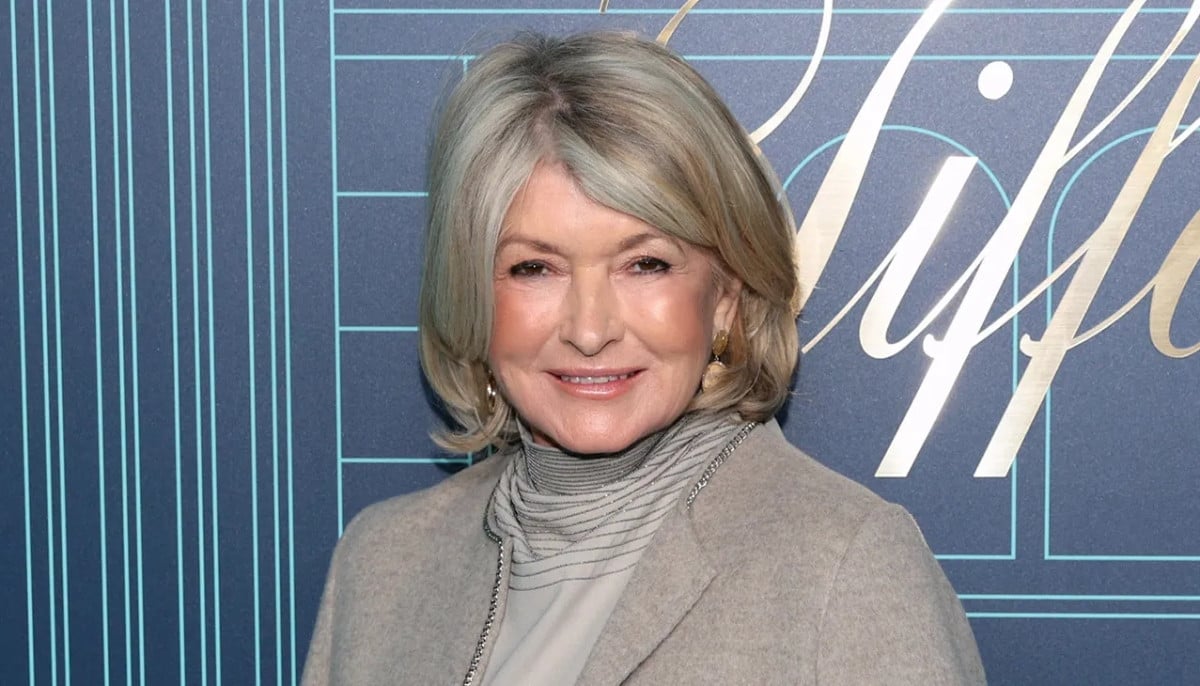

Martha Stewart on surviving rigorous times amid upcoming memoir release

-

18-month old on life-saving medication returned to ICE detention

-

Cardi B says THIS about Bad Bunny's Grammy statement

-

Chicago child, 8, dead after 'months of abuse, starvation', two arrested

-

Funeral home owner sentenced to 40 years for selling corpses, faking ashes

-

Australia’s Liberal-National coalition reunites after brief split over hate laws

-

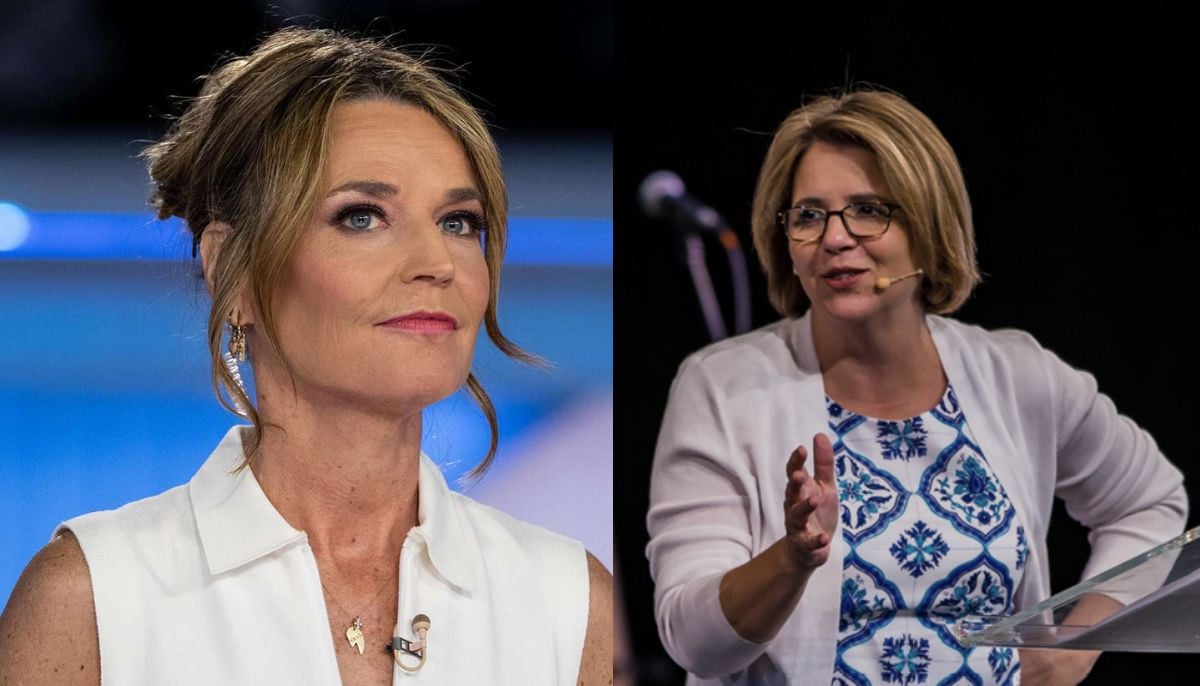

Savannah Guthrie addresses ransom demands made by her mother Nancy's kidnappers