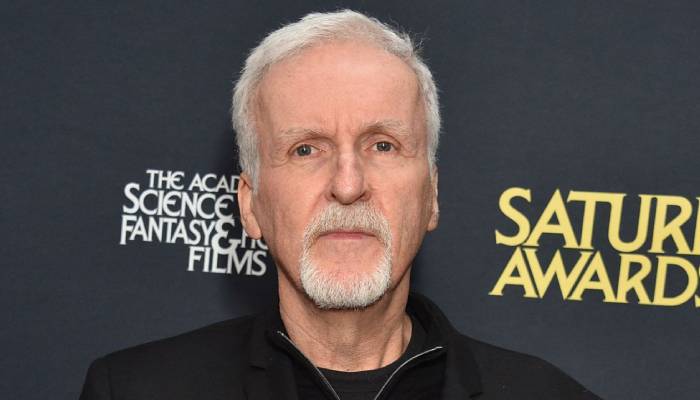

James Cameron addresses the dangers of AI gaining control over weapons systems

Oscar-winning filmmaker discusses AI as he announces new Hiroshima project

Hollywood director James Cameron has recently opened up about the dangers of artificial intelligence (AI) gaining control over weapons systems.

The Titanic filmmaker spoke to Rolling Stone while promoting the release of the book Ghosts of Hiroshima, which he also plans to adapt into a film.

During the interview, James warned that an AI-driven arms race is dangerous and could lead to a “Terminator-style apocalypse” in the future.

“I do think there’s still a danger of a Terminator-style apocalypse where you put AI together with weapons systems, even up to the level of nuclear weapon systems, nuclear defense counterstrike, all that stuff,” said the Oscar-winning director.

James pointed out that the “theatre of operations is so rapid, the decision windows are so fast, it would take a superintelligence to be able to process it, and maybe we’ll be smart and keep a human in the loop”.

However, the Avatar filmmaker noted that humans are “fallible” and that there have been a “lot of mistakes made that have put us right on the brink of international incidents that could have led to nuclear war”.

James told the outlet, “I feel like we’re at this cusp in human development where you’ve got the three existential threats: climate and our overall degradation of the natural world, nuclear weapons, and superintelligence.”

“They’re all sort of manifesting and peaking at the same time. Maybe the superintelligence is the answer,” he concluded.
